In the last tutorial we learned how to leverage the Scrapy framework to solve common web scraping tasks. Today we are going to take a look at Selenium (with Python ❤️ ) in a step-by-step tutorial.

Selenium refers to a number of different open-source projects used for browser automation. It supports bindings for all major programming languages, including our favorite language: Python.

The Selenium API uses the WebDriver protocol to control web browsers like Chrome, Firefox, or Safari. Selenium can control both a locally installed browser instance and one running on a remote machine over the network.

Originally (and that was about 20 years ago now!), Selenium was intended for cross-browser, end-to-end testing (acceptance tests). Since then, it has also been widely adopted as a general browser automation platform (e.g. for taking screenshots), which, of course, includes web crawling and web scraping. Hardly anything is better at "talking" to a website than a real, proper browser, right?

Selenium provides a wide range of ways to interact with sites, such as:

- Clicking buttons

- Populating forms with data

- Scrolling the page

- Taking screenshots

- Executing your own, custom JavaScript code

But the strongest argument in its favor is the ability to handle sites in a natural way, just as any browser will. This really shines with JavaScript-heavy Single-Page Application sites. If you scraped such a site with the traditional combination of HTTP client and HTML parser, you'd mostly get lots of JavaScript files, but not much data to scrape.
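To make that concrete, here is a small sketch that uses only Python's standard library. The SPA_SHELL string is a made-up stand-in for what a plain HTTP client might receive from a JavaScript-heavy site, and TextCollector is a hypothetical helper. The parser finds a script reference but no visible text at all.

```python
from html.parser import HTMLParser

# Hypothetical response body of a single-page application, as a plain
# HTTP client would receive it before any JavaScript has run.
SPA_SHELL = """
<html>
  <head><script src="/static/app.js"></script></head>
  <body><div id="root"></div></body>
</html>
"""


class TextCollector(HTMLParser):
    """Collects visible text and counts <script> tags."""

    def __init__(self):
        super().__init__()
        self.text = []
        self.scripts = 0
        self._in_script = False

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            self.scripts += 1
            self._in_script = True

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_script = False

    def handle_data(self, data):
        # Keep only non-empty text that is not inside a <script> element
        if not self._in_script and data.strip():
            self.text.append(data.strip())


collector = TextCollector()
collector.feed(SPA_SHELL)
print(collector.scripts, collector.text)  # prints: 1 []
```

The data only shows up once a browser has executed the referenced script, which is exactly the part Selenium takes care of.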

Installation

While Selenium supports a number of browser engines, we will use Chrome for the following example, so please make sure you have the following packages installed:

- The Chrome browser (see the Chrome download page)

- A ChromeDriver binary matching your Chrome version

- The Selenium Python Binding package

To install the Selenium package, as always, I recommend that you create a virtual environment (for example using virtualenv) and then:

pip install selenium

Quickstart

Once you have downloaded both Chrome and ChromeDriver and installed the Selenium package, you should be ready to start the browser:

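from selenium import webdriver
from selenium.webdriver.chrome.service import Service

DRIVER_PATH = '/path/to/chromedriver'

# Selenium 4 takes the driver location through a Service object
driver = webdriver.Chrome(service=Service(DRIVER_PATH))
driver.get('https://google.com')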

As we did not explicitly configure headless mode, this will actually display a regular Chrome window, with an additional alert message on top, saying that Chrome is being controlled by Selenium.

Chrome Headless Mode

Running the browser from Selenium the way we just did is particularly helpful during development. It allows you to observe exactly what's going on and how the page and the browser are behaving in the context of your code. Once you are happy with everything, however, it is generally advisable to switch to said headless mode in production.

In that mode, Selenium will start Chrome in the "background" without any visual output or windows. Imagine a production server, running a couple of Chrome instances at the same time with all their windows open. Well, servers generally tend to be neglected when it comes to how "attentive" people are towards their UIs - poor things ☹️ - but seriously, there's no point in wasting GUI resources for no reason.

Fortunately, enabling headless mode only takes a few flags.

from selenium import webdriver
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.chrome.service import Service

options = Options()
options.add_argument("--headless=new")  # run Chrome without a visible window
options.add_argument("--window-size=1920,1200")

driver = webdriver.Chrome(options=options, service=Service(DRIVER_PATH))

We only need to instantiate an Options object, add the --headless=new argument, and pass the object to our WebDriver constructor. Done.
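If you start headless browsers in several places, it can help to wrap the setup in a small factory. The sketch below passes Chrome's --headless=new flag as a plain argument. headless_arguments and make_headless_chrome are hypothetical helper names, and make_headless_chrome assumes that chromedriver can be found on your PATH.

```python
def headless_arguments(width=1920, height=1200):
    """Return the Chrome command-line flags for a headless session."""
    return ["--headless=new", f"--window-size={width},{height}"]


def make_headless_chrome(width=1920, height=1200):
    """Start headless Chrome (assumes chromedriver is on your PATH)."""
    # Imported here so the flag helper above stays usable without Selenium.
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options

    options = Options()
    for argument in headless_arguments(width, height):
        options.add_argument(argument)
    return webdriver.Chrome(options=options)


print(headless_arguments(1280, 720))
# prints: ['--headless=new', '--window-size=1280,720']
```

Calling make_headless_chrome() then gives you a driver you can use exactly like the one above; remember to call driver.quit() when you are done.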

WebDriver Page Properties

Building on our headless mode example, let's go full Mario and check out Nintendo's website.

from selenium import webdriver
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.chrome.service import Service

options = Options()
options.add_argument("--headless=new")  # run Chrome without a visible window
options.add_argument("--window-size=1920,1200")

driver = webdriver.Chrome(options=options, service=Service(DRIVER_PATH))
driver.get("https://www.nintendo.com/")
print(driver.page_source)
driver.quit()

When you run that script, you'll get a couple of browser-related debug messages and eventually the HTML code of nintendo.com. That's because our print call accesses the driver's page_source property, which contains the HTML document of the page we last requested.

Two other interesting WebDriver fields are:

- driver.title, to get the page's title

- driver.current_url, to get the current URL (this can be useful when there are redirections on the website and you need the final URL)

A full list of properties can be found in WebDriver's documentation.
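As a small illustration, these properties can be gathered into one dictionary, for example for logging. page_summary is a hypothetical helper, and the SimpleNamespace object is only a stand-in so that the sketch runs without a browser; in practice you would pass your real driver.

```python
from types import SimpleNamespace


def page_summary(driver):
    """Collect a few WebDriver page properties into a plain dict."""
    return {
        "title": driver.title,
        "final_url": driver.current_url,  # the URL after any redirects
        "html_length": len(driver.page_source),
    }


# Stand-in object exposing the same three properties as a WebDriver.
fake_driver = SimpleNamespace(
    title="Example Domain",
    current_url="https://example.com/",
    page_source="<html></html>",
)
summary = page_summary(fake_driver)
print(summary)
```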

Locating Elements

In order to scrape/extract data, you first need to know where that data is. For that reason, locating website elements is one of the key features of web scraping. Naturally, Selenium comes with that out of the box (e.g. test cases need to make sure that a specific element is present/absent on the page).

There are quite a few standard ways to find a specific element on a page. For example, you could

- search by the name of the tag

- filter for a specific HTML class or HTML ID

- or use CSS selectors or XPath expressions

For XPath expressions in particular, I'd highly recommend checking out our article on how XPath expressions can help you filter the DOM tree. If you are not yet fully familiar with XPath, it provides a very good first introduction to the expressions and how to use them.

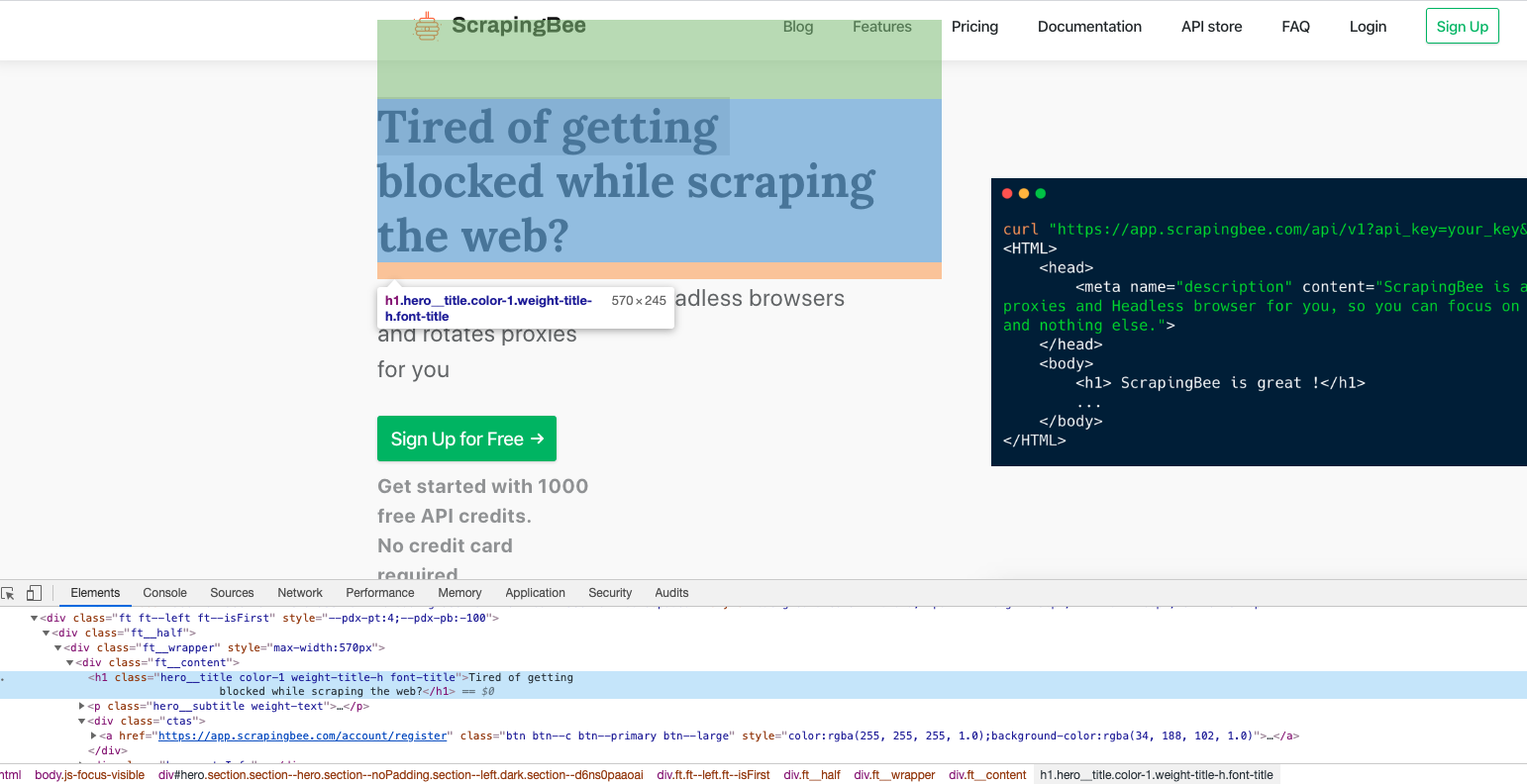

As usual, the easiest way to locate an element is to open your Chrome dev tools and inspect the element that you need. A cool shortcut for this is to highlight the element you want with your mouse and then press Ctrl + Shift + C or on macOS Cmd + Shift + C instead of having to right click and choose Inspect every time.

Once you have found the element in the DOM tree, you can work out the best way to address it programmatically. For example, you can right-click the element in the inspector and copy its absolute XPath expression or CSS selector.

The find_element methods

WebDriver provides two main methods for finding elements.

- find_element

- find_elements

They are pretty similar, with the difference that the former looks for one single element, which it returns, whereas the latter will return a list of all found elements.

Both methods support eight different search types, indicated with the By class.

| Type | Description | DOM Sample | Example |
|---|---|---|---|
| By.ID | Searches for elements based on their HTML ID | `<div id="myID">` | `find_element(By.ID, "myID")` |
| By.NAME | Searches for elements based on their name attribute | `<input name="myNAME">` | `find_element(By.NAME, "myNAME")` |
| By.XPATH | Searches for elements based on an XPath expression | `<span>My <a>Link</a></span>` | `find_element(By.XPATH, "//span/a")` |
| By.LINK_TEXT | Searches for anchor elements based on a match of their text content | `<a>My Link</a>` | `find_element(By.LINK_TEXT, "My Link")` |
| By.PARTIAL_LINK_TEXT | Searches for anchor elements based on a sub-string match of their text content | `<a>My Link</a>` | `find_element(By.PARTIAL_LINK_TEXT, "Link")` |
| By.TAG_NAME | Searches for elements based on their tag name | `<h1>` | `find_element(By.TAG_NAME, "h1")` |
| By.CLASS_NAME | Searches for elements based on their HTML classes | `<div class="myCLASS">` | `find_element(By.CLASS_NAME, "myCLASS")` |
| By.CSS_SELECTOR | Searches for elements based on a CSS selector | `<span>My <a>Link</a></span>` | `find_element(By.CSS_SELECTOR, "span > a")` |

A full description of the methods can be found in the Selenium documentation.

find_element examples

Let's say we have the following HTML document ....

<html>

<head>

... some stuff

</head>

<body>

<h1 class="someclass" id="greatID">Super title</h1>

</body>

</html>

.... and we want to select the <h1> element. Here, the following five examples would be identical in what they return:

h1 = driver.find_element(By.TAG_NAME, 'h1')
h1 = driver.find_element(By.CLASS_NAME, 'someclass')
h1 = driver.find_element(By.XPATH, '//h1')
h1 = driver.find_element(By.XPATH, '/html/body/h1')
h1 = driver.find_element(By.ID, 'greatID')

Another example could be to select all anchor/link tags on the page. As we want more than one element, we'd be using find_elements here (please do note the plural):

all_links = driver.find_elements(By.TAG_NAME, 'a')
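Once you have that list, a typical next step is to pull the link targets out of it. The sketch below does this with get_attribute, a WebElement method. collect_hrefs is a hypothetical helper and FakeLink is only a stand-in for WebElement objects so the example runs without a browser; with a real driver you would pass all_links instead.

```python
def collect_hrefs(link_elements):
    """Return the unique, non-empty href values in page order."""
    seen = set()
    hrefs = []
    for link in link_elements:
        href = link.get_attribute("href")
        if href and href not in seen:
            seen.add(href)
            hrefs.append(href)
    return hrefs


class FakeLink:
    """Stand-in for a WebElement; it only knows its href attribute."""

    def __init__(self, href):
        self._href = href

    def get_attribute(self, name):
        return self._href if name == "href" else None


links = [
    FakeLink("https://a.example/"),
    FakeLink(None),  # an anchor without href
    FakeLink("https://a.example/"),  # duplicate
    FakeLink("https://b.example/"),
]
print(collect_hrefs(links))
# prints: ['https://a.example/', 'https://b.example/']
```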

Some elements aren't easily accessible with an ID or a simple class, and that's when you need an XPath expression. You also might have multiple elements with the same class and sometimes even ID, even though the latter is supposed to be unique.

XPath is my favorite way of locating elements on a web page. It's a powerful way to extract any element on a page, based on its absolute position in the DOM, or relative to another element.

Selenium WebElement

A WebElement is a Selenium object representing an HTML element.

There are many actions that you can perform on those objects. Here are the most useful:

- Accessing the text of the element with the property element.text

- Clicking the element with element.click()

- Accessing an attribute with element.get_attribute('class')

- Sending text to an input with element.send_keys('mypassword')

There are some other interesting methods like is_displayed(). This returns True if an element is visible to the user and can prove useful to avoid honeypots (e.g. deliberately hidden input elements). Honeypots are mechanisms used by website owners to detect bots. For example, take an HTML input with the attribute type=hidden, like this:

<input type="hidden" id="custId" name="custId" value="">

This input value is supposed to be blank. If a bot visits the page and believes it needs to populate all input elements with values, it will also fill the hidden input. A legitimate user would never provide a value for that hidden field, because the browser does not display it in the first place.

That's a classic honeypot.
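A simple way to stay clear of such fields is to filter on is_displayed() before filling anything in. The following is a minimal sketch: visible_inputs is a hypothetical helper, and FakeInput merely imitates a WebElement so the example runs without a browser. With a real page, the list would come from something like driver.find_elements(By.TAG_NAME, "input").

```python
def visible_inputs(elements):
    """Keep only the elements a human visitor could actually see."""
    return [element for element in elements if element.is_displayed()]


class FakeInput:
    """Stand-in for a WebElement with a name and a visibility flag."""

    def __init__(self, name, displayed):
        self.name = name
        self._displayed = displayed

    def is_displayed(self):
        return self._displayed


inputs = [
    FakeInput("acct", True),
    FakeInput("custId", False),  # the hidden honeypot field
    FakeInput("pw", True),
]
safe = visible_inputs(inputs)
print([field.name for field in safe])  # prints: ['acct', 'pw']
```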

Full example

Here is a full example using the Selenium API methods we just covered.

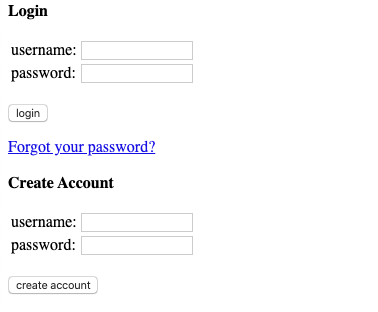

We are going to log into Hacker News:

Of course, authenticating to Hacker News is not really useful on its own. However, you could imagine creating a bot to automatically post a link to your latest blog post.

In order to authenticate we need to:

- Go to the login page using driver.get()

- Select the username input field using driver.find_element and then call element.send_keys() to send text to the field

- Follow the same process with the password input field

- Select the login button (find_element, of course) and click it using element.click()

Should be easy, right? Let's see the code:

# assumes: from selenium.webdriver.common.by import By
driver.get("https://news.ycombinator.com/login")

# send_keys() and click() return None, so there is nothing to assign
driver.find_element(By.XPATH, "//input[@type='text']").send_keys(USERNAME)
driver.find_element(By.XPATH, "//input[@type='password']").send_keys(PASSWORD)
driver.find_element(By.XPATH, "//input[@value='login']").click()

Easy, right? Now there is one important thing that is missing here. How do we know if we are logged in?

We could try a couple of things:

- Check for an error message (like "Wrong password")

- Check for one element on the page that is only displayed once logged in.

So, we're going to check for the logout button. The logout button has the ID logout (easy)!

We can't just check if the element is None, because find_element raises an exception if the element is not found in the DOM. So we have to use a try/except block and catch the NoSuchElementException exception:

# don't forget: from selenium.common.exceptions import NoSuchElementException
try:
    logout_button = driver.find_element(By.ID, "logout")
    print('Successfully logged in')
except NoSuchElementException:
    print('Incorrect login/password')

Brilliant, works.
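As an alternative to the try/except block, here is a sketch that relies on find_elements returning an empty list when nothing matches. element_exists is a hypothetical helper, and FakePage is only a stand-in so the example runs without a browser. With a real driver you would pass By.ID (whose value is the string "id") and "logout".

```python
def element_exists(driver, by, value):
    """True if at least one element matches the locator.

    find_elements returns an empty list instead of raising
    NoSuchElementException when nothing is found.
    """
    return len(driver.find_elements(by, value)) > 0


class FakePage:
    """Stand-in driver that only knows which element IDs exist."""

    def __init__(self, present_ids):
        self._present_ids = set(present_ids)

    def find_elements(self, by, value):
        if by == "id" and value in self._present_ids:
            return [value]
        return []


logged_in = element_exists(FakePage({"logout"}), "id", "logout")
logged_out = element_exists(FakePage(set()), "id", "logout")
print(logged_in, logged_out)  # prints: True False
```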

Taking screenshots

The beauty of browser approaches, like Selenium, is that we do not only get the data and the DOM tree, but that - being a browser - it also properly and fully renders the whole page. This, of course, also allows for screenshots and Selenium comes fully prepared here.

driver.save_screenshot('screenshot.png')

One single call and we have a screenshot of our page. Now, if that's not cool!

Please note that a few things can still go wrong or need tweaking when you take a screenshot with Selenium. First, you have to make sure that the window size is set correctly. Then, you need to make sure that every asynchronous HTTP call made by the frontend JavaScript code has finished, and that the page is fully rendered.

In our Hacker News case it's simple and we don't have to worry about these issues.
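For pages where these issues do matter, one possible approach is to set the window size explicitly and poll document.readyState before saving the image. The sketch below is an assumption-laden starting point, not a complete solution: readyState only tells you that the initial document has loaded, not that every later AJAX call has finished. screenshot_when_ready is a hypothetical helper and FakeBrowser is a stand-in so the example runs without a browser; set_window_size, execute_script and save_screenshot are the real WebDriver methods it imitates.

```python
import time


def screenshot_when_ready(driver, path, width=1920, height=1200,
                          timeout=10.0, poll=0.25):
    """Resize the window, wait for the document to load, then save a screenshot.

    Returns the result of save_screenshot(), or False on timeout.
    """
    driver.set_window_size(width, height)
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if driver.execute_script("return document.readyState") == "complete":
            return driver.save_screenshot(path)
        time.sleep(poll)
    return False


class FakeBrowser:
    """Stand-in driver whose page needs three checks to finish loading."""

    def __init__(self):
        self._states = iter(["loading", "interactive", "complete"])
        self.size = None
        self.saved = []

    def set_window_size(self, width, height):
        self.size = (width, height)

    def execute_script(self, script):
        return next(self._states)

    def save_screenshot(self, path):
        self.saved.append(path)
        return True


browser = FakeBrowser()
taken = screenshot_when_ready(browser, "screenshot.png", poll=0)
print(taken, browser.size, browser.saved)
# prints: True (1920, 1200) ['screenshot.png']
```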

💡 Did you know that ScrapingBee offers a dedicated screenshot API? That is particularly convenient when you want to take screenshots at scale. Nothing cosier than sending your screenshot requests to the API, sitting back, and enjoying a hot cocoa ☕.

Waiting for an element to be present

Dealing with a website that uses lots of JavaScript to render its content can be tricky. These days, more and more sites are using frameworks like Angular, React and Vue.js for their front-end. These front-end frameworks are complicated to deal with because they don't just serve HTML code, but you have a rather complex set of JavaScript code involved, which changes the DOM tree on-the-fly and sends lots of information asynchronously in the background via AJAX.

That means we can't just send a request and immediately scrape the data, but we may have to wait until JavaScript has completed its work. There are typically two ways to approach that:

- Use time.sleep() before taking the screenshot.

- Employ a WebDriverWait object.

If you use a time.sleep() you will have to use the most reasonable delay for your use case. The problem is, you're either waiting too long or not long enough and neither is ideal. Also, the site may load slower on your residential ISP connection than when your code is running in production in a datacenter. With WebDriverWait, you don't really have to take that into account. It will wait only as long as necessary until the desired element shows up (or it hits a timeout).

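from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait

try:
    # wait up to five seconds for the element to show up in the DOM
    element = WebDriverWait(driver, 5).until(
        EC.presence_of_element_located((By.ID, "mySuperId"))
    )
finally:
    driver.quit()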

This will wait until the element with the HTML ID mySuperId appears, or the timeout of five seconds has been reached. There are quite a few other Expected Conditions types:

- alert_is_present

- element_to_be_clickable

- text_to_be_present_in_element

- visibility_of

A full list of Waits and their Expected Conditions can, of course, be found in the Selenium documentation.
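To illustrate why an explicit wait beats a fixed time.sleep(), here is a plain-Python sketch of the polling idea. This is not Selenium's implementation, just a simplified model of it: wait_until is a hypothetical helper, and element_appears simulates an element that only shows up on the third check.

```python
import time


def wait_until(condition, timeout=5.0, poll=0.5):
    """Call condition() until it returns a truthy value or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result  # stop as soon as the condition holds
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll)


# Simulated "element": it only shows up on the third check.
attempts = {"count": 0}


def element_appears():
    attempts["count"] += 1
    return "element" if attempts["count"] >= 3 else None


found = wait_until(element_appears, timeout=5.0, poll=0.01)
print(found, attempts["count"])  # prints: element 3
```

The loop returns as soon as the condition is met, so a fast page costs almost no waiting time, while a slow page still gets the full timeout.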

But, having a full browser engine at our disposal, does not only mean we can, more or less, easily handle JavaScript code run by the website, it also means we have the ability to run our very own, custom JavaScript. Let's check that out next.

Executing JavaScript

Just as with screenshots, we can equally make full use of our browser's JavaScript engine. That means we can inject and execute arbitrary code and run it in the site's context. You want to take a screenshot of a part located a bit down the page? Easy, window.scrollBy() and execute_script() have got you covered.

javaScript = "window.scrollBy(0, 1000);"

driver.execute_script(javaScript)

Or you want to highlight all anchor tags with a border? Piece of cake 🍰

javaScript = "document.querySelectorAll('a').forEach(e => e.style.border='red 2px solid')"

driver.execute_script(javaScript)

An additional perk of execute_script() is that it hands back whatever value your script returns. In short, the following code will pass our document's title straight to our title variable.

title = driver.execute_script('return document.title')

Not bad, is it?

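execute_script() is synchronous: it blocks until the script has finished and its return value is available. If your JavaScript only completes later (for example after a timer or a network request), you can use its counterpart execute_async_script(), which waits until the script signals completion through the callback it receives as its last argument.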
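Combining scrolling with return values gives you a common pattern for pages that load more content as you scroll: keep scrolling until the page height stops growing. The following is a sketch under assumptions, with scroll_to_bottom as a hypothetical helper and FakeScroller as a stand-in driver so the example runs without a browser. The pause you need on a real site depends on how quickly it loads new content.

```python
import time


def scroll_to_bottom(driver, pause=1.0, max_rounds=10):
    """Scroll down until the page height stops growing.

    Returns the number of scrolls that were performed.
    """
    last_height = driver.execute_script("return document.body.scrollHeight")
    for round_number in range(1, max_rounds + 1):
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
        time.sleep(pause)  # give lazily loaded content time to arrive
        new_height = driver.execute_script("return document.body.scrollHeight")
        if new_height == last_height:
            return round_number
        last_height = new_height
    return max_rounds


class FakeScroller:
    """Stand-in driver whose page grows twice and then stays at 3000px."""

    def __init__(self):
        self._heights = iter([1000, 2000, 3000, 3000])

    def execute_script(self, script):
        if script.startswith("return"):
            return next(self._heights)
        return None


rounds = scroll_to_bottom(FakeScroller(), pause=0)
print(rounds)  # prints: 3
```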

Using a proxy with Selenium Wire

Unfortunately, Selenium proxy handling is quite basic. For example, it can't handle proxy authentication out of the box.

To solve this issue, you can use Selenium Wire. This package extends Selenium's bindings and gives you access to all the underlying requests made by the browser. If you need to use Selenium with a proxy with authentication this is the package you need.

pip install selenium-wire

This code snippet shows you how to quickly use your headless browser behind a proxy.

# Install the Python selenium-wire library:
# pip install selenium-wire
from selenium.webdriver.chrome.service import Service
from seleniumwire import webdriver

proxy_username = "USER_NAME"
proxy_password = "PASSWORD"
proxy_host = "proxy.scrapingbee.com"  # host only; the scheme is added below
proxy_port = 8886

options = {
    "proxy": {
        "http": f"http://{proxy_username}:{proxy_password}@{proxy_host}:{proxy_port}",
    },
    "verify_ssl": False,  # a top-level Selenium Wire option, not part of "proxy"
}

URL = "https://httpbin.org/headers?json"

driver = webdriver.Chrome(
    service=Service("YOUR-CHROME-EXECUTABLE-PATH"),
    seleniumwire_options=options,
)
driver.get(URL)
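Because the proxy URL is assembled from four separate values, it is easy to end up with a malformed result, for example a doubled http:// prefix when the host value already carries a scheme. The sketch below guards against that. build_proxy_options is a hypothetical helper, and the dictionary it returns only contains the "proxy" and "http" keys used in the snippet above.

```python
from urllib.parse import urlsplit


def build_proxy_options(username, password, host, port):
    """Build a Selenium Wire options dict for an authenticated HTTP proxy."""
    # Accept "proxy.example.com" as well as "http://proxy.example.com".
    hostname = urlsplit(host).hostname if "://" in host else host
    proxy_url = f"http://{username}:{password}@{hostname}:{port}"
    return {"proxy": {"http": proxy_url}}


options = build_proxy_options(
    "USER_NAME", "PASSWORD", "http://proxy.scrapingbee.com", 8886
)
print(options["proxy"]["http"])
# prints: http://USER_NAME:PASSWORD@proxy.scrapingbee.com:8886
```

The resulting dictionary can be passed as seleniumwire_options when creating the driver.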

Blocking images and JavaScript

Having the entire set of standard browser features at our fingertips really brings scraping to the next level. We have fully rendered pages, which allows us to take screenshots, the site's JavaScript is properly executed in the right context, and more.

Still, at times, we actually do not need all these features. For example, if we do not take screenshots, there's little point in downloading all the images. Fortunately, Selenium and WebDriver have got us covered here as well.

Do you remember the Options class from before? That class also accepts a preferences object, where we can enable and disable features individually. For example, if we wanted to disable the loading of images and the execution of JavaScript code, we'd be using the following options:

from selenium import webdriver
from selenium.webdriver.chrome.service import Service

chrome_options = webdriver.ChromeOptions()

# Block images and JavaScript (2 = block)
chrome_prefs = {
    "profile.default_content_setting_values": {
        "images": 2,
        "javascript": 2,
    }
}
chrome_options.add_experimental_option("prefs", chrome_prefs)

driver = webdriver.Chrome(
    service=Service("YOUR-CHROME-EXECUTABLE-PATH"),
    options=chrome_options,
)
driver.get(URL)
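If you want to toggle these settings per job, the preferences dictionary can be generated by a small function. This is a sketch under assumptions: content_prefs is a hypothetical helper, the value 2 for "block" is taken from the snippet above, and 1 as the value for "allow" is my assumption about Chrome's content settings.

```python
BLOCK = 2  # value used in the snippet above to block a content type
ALLOW = 1  # assumption: Chrome's counterpart value for "allow"


def content_prefs(block_images=True, block_javascript=True):
    """Build the Chrome prefs dict for image and JavaScript handling."""
    return {
        "profile.default_content_setting_values": {
            "images": BLOCK if block_images else ALLOW,
            "javascript": BLOCK if block_javascript else ALLOW,
        }
    }


# Block images but keep JavaScript enabled, e.g. for a JavaScript-heavy site.
prefs = content_prefs(block_javascript=False)
print(prefs)
```

The result can be handed to ChromeOptions via add_experimental_option("prefs", prefs).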

💡 Love Selenium? Check out our guide to rSelenium which helps you web scrape in R.

Conclusion

I hope you enjoyed this blog post! You should now have a good understanding of how the Selenium API works in Python. If you want to know more about how to scrape the web with Python don't hesitate to take a look at our general Python web scraping guide.

Another interesting read is our guide to Puppeteer with Python. Puppeteer is an API for controlling Chrome, and it's quite a bit more powerful than Selenium (it's maintained directly by the Google team).

Selenium is often necessary to extract data from websites using lots of JavaScript. The problem is that running lots of Selenium/Headless Chrome instances at scale is hard. This is one of the things we solve with ScrapingBee, our web scraping API. Our API is a SaaS scraping platform that lets you easily scale your crawling jobs and also handles other scraping-related topics out of the box, such as proxy and connection management and request throttling.

Selenium is also an excellent tool to automate almost anything on the web.

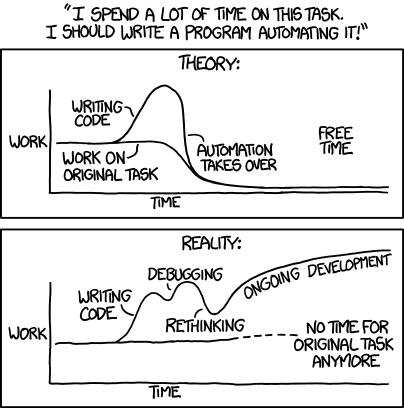

If you perform repetitive tasks, like filling forms or checking information behind a login form where the website doesn't have an API, it's maybe a good idea to automate it with Selenium, just don't forget this xkcd comic:

Kevin worked in the web scraping industry for 10 years before co-founding ScrapingBee. He is also the author of the Java Web Scraping Handbook.