Over 7.59 million active websites use Cloudflare, so there's a good chance that the website you intend to scrape is protected by it. Websites protected by services like Cloudflare can be challenging to scrape because of the anti-bot measures they implement. If you've tried scraping such websites, you're likely already aware of how difficult it is to bypass Cloudflare's bot detection system.

In this article, you’ll learn how to use Cloudscraper, an open-source Python library, to scrape Cloudflare-protected websites. You’ll learn about some of the advanced features of Cloudscraper, such as CAPTCHA bypass and user-agent manipulation. Furthermore, we’ll discuss the limitations of Cloudscraper and suggest the most effective alternative method.

Without further ado, let’s get started!

TL;DR Cloudscraper Python example code

If you're in a hurry, here's the complete code we'll write in this tutorial. It scrapes domain names and their prices from namecheap.com, a Cloudflare-protected website.

If your target website is protected by a CAPTCHA, replace the placeholder API key in the captcha argument with your actual 2Captcha API key. To get one, sign up on 2Captcha, which is a paid service. If your target website isn't CAPTCHA-protected, remove or comment out the captcha argument.

import cloudscraper
from bs4 import BeautifulSoup
import json


def fetch_html_content(url):
    """
    Fetches HTML content from the specified URL using CloudScraper.

    Args:
        url (str): The URL to fetch HTML content from.

    Returns:
        str: The HTML content of the webpage, or None if an error occurs.
    """
    try:
        # Create a CloudScraper instance to bypass bot protection
        scraper = cloudscraper.create_scraper(
            interpreter="nodejs",
            delay=10,
            browser={
                "browser": "chrome",
                "platform": "ios",
                "desktop": False,
            },
            captcha={
                "provider": "2captcha",
                "api_key": "YOUR_2CAPTCHA_API_KEY",
            },
        )

        # Send a GET request to the URL
        response = scraper.get(url)

        # Check if the request was successful
        if response.status_code == 200:
            # Return the HTML content of the webpage
            return response.text
        else:
            # Print an error message if the request fails
            print(f"Failed to fetch URL: {url}. Status code: {response.status_code}")
            return None
    except Exception as e:
        # Print an error message if an exception occurs during the request
        print(f"An error occurred while fetching URL: {url}. Error: {str(e)}")
        return None


def extract_domain_prices(html_content):
    """
    Extracts domain names and their prices from HTML content.

    Args:
        html_content (str): The HTML content of the webpage.

    Returns:
        dict: A dictionary containing domain names as keys and their prices as values.
    """
    try:
        # Create a BeautifulSoup object to parse HTML content
        soup = BeautifulSoup(html_content, "html.parser")

        # Initialize an empty dictionary to store domain names and prices
        domain_prices = {}

        # Find all product card elements on the webpage
        product_cards = soup.find_all(class_="nchp-product-card")

        # Iterate over each product card
        for card in product_cards:
            # Find the element containing the domain name
            domain_name_elem = card.find(class_="nchp-product-card__name")
            # Extract the text of the domain name element and remove leading/trailing whitespace
            domain_name = domain_name_elem.text.strip() if domain_name_elem else None

            # Find the element containing the price information
            price_elem = card.find(class_="nchp-product-card__price-text")
            # Extract the text of the price element and remove leading/trailing whitespace
            price_text = price_elem.text.strip() if price_elem else None

            # Check if the domain name starts with a dot
            if domain_name and domain_name.startswith("."):
                # Add the domain name and its corresponding price to the dictionary
                domain_prices[domain_name] = price_text

        # Return the dictionary containing domain names and prices
        return domain_prices
    except Exception as e:
        # Print an error message if an exception occurs during the extraction process
        print(f"An error occurred while extracting domain prices. Error: {str(e)}")
        # Return an empty dictionary to indicate that no domain prices were extracted
        return {}


if __name__ == "__main__":
    # Define the URL of the webpage to scrape
    url = "https://www.namecheap.com/"

    # Fetch HTML content from the specified URL
    html_content = fetch_html_content(url)

    # Check if HTML content was successfully retrieved
    if html_content:
        # Extract domain prices from the HTML content
        domain_prices = extract_domain_prices(html_content)

        # Check if domain prices were successfully extracted
        if domain_prices:
            # Save domain prices to a JSON file
            with open("domain_prices.json", "w") as json_file:
                json.dump(domain_prices, json_file, indent=4)
            print("Domain prices have been saved to domain_prices.json.")
        else:
            # Print a message if no domain prices were extracted
            print("No domain prices were extracted.")
    else:
        # Print a message if HTML content retrieval failed
        print("Failed to retrieve HTML content. Please check your internet connection or try again later.")

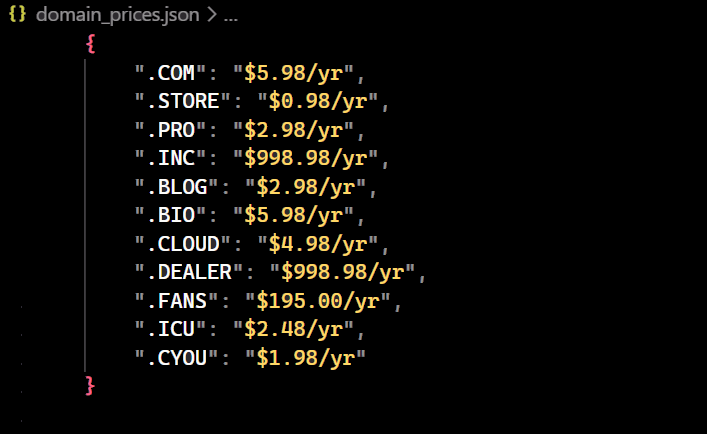

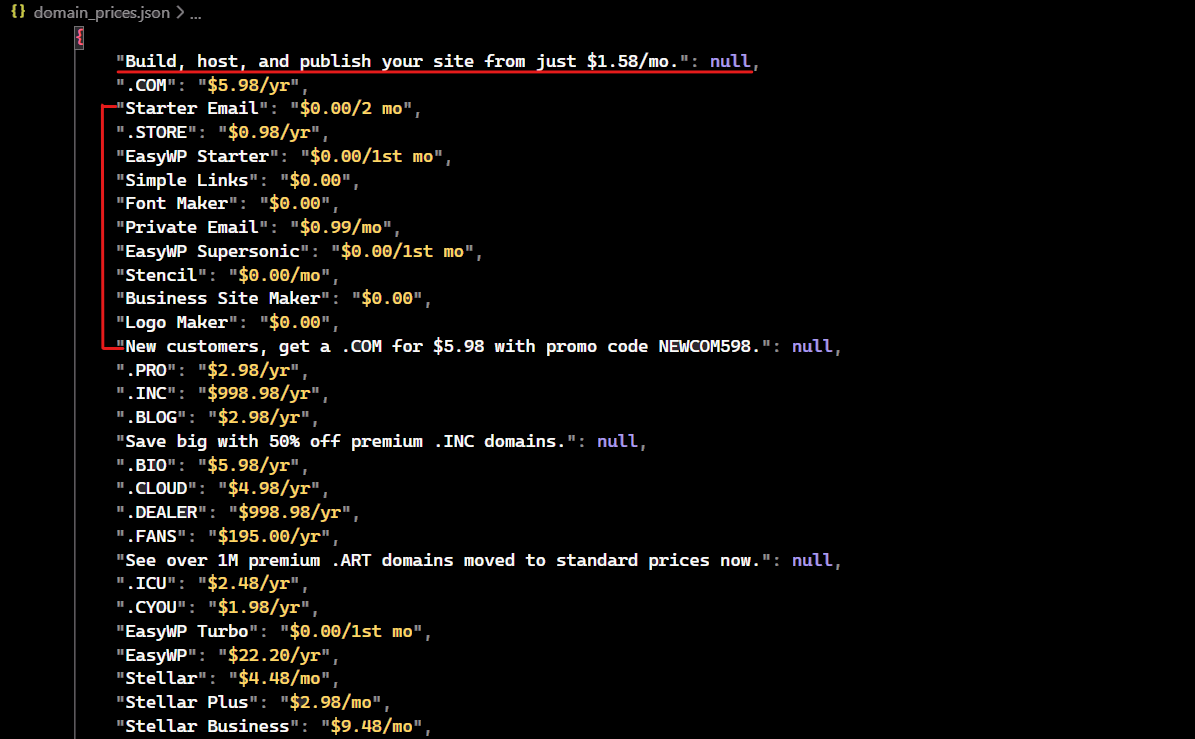

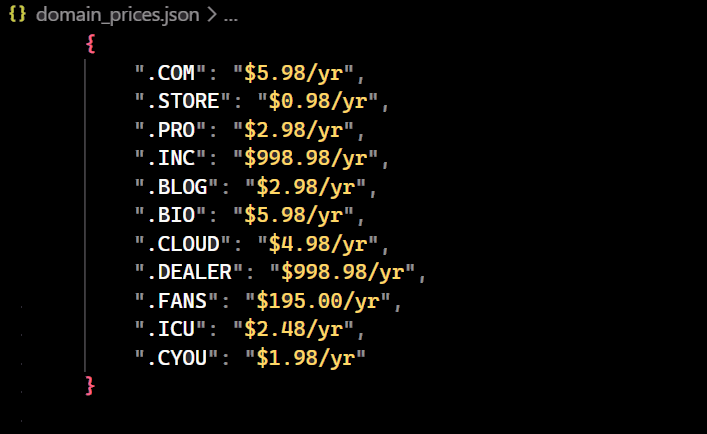

The result is:

What Is Cloudscraper and How Does It Work

Cloudscraper is a Python library built on top of the Requests library and designed to bypass Cloudflare's anti-bot challenges, so you can scrape data from websites protected by Cloudflare.

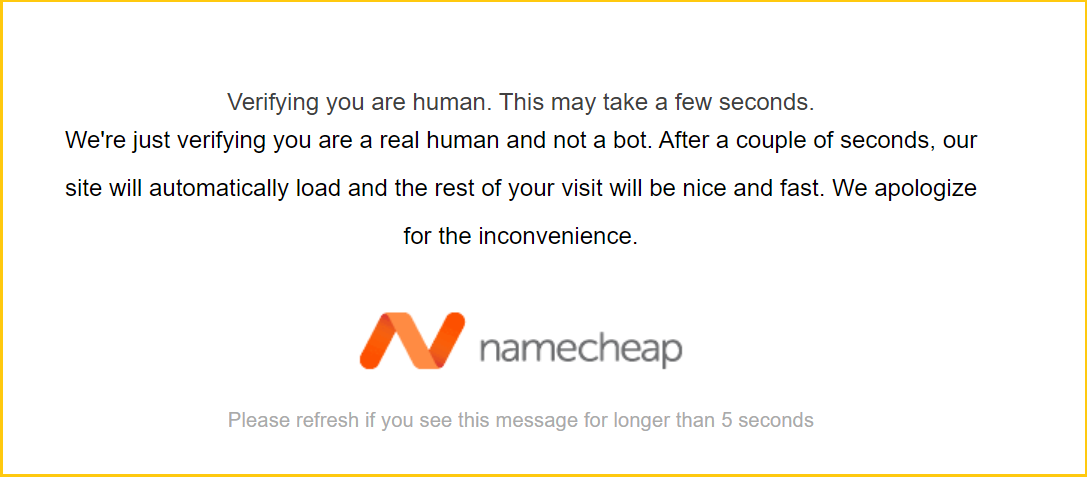

When you frequently request websites protected by Cloudflare, the service may suspect you're a bot and present a challenge to verify you're a real user. This process can take up to 5 seconds. If you fail the challenge, you'll be blocked from accessing the website.

Cloudflare uses various browser fingerprinting challenges and checks to distinguish between genuine users and scrapers/bots. Cloudscraper circumvents these challenges by mimicking the behavior of a real web browser.

This tool leverages a JavaScript engine/interpreter to overcome JavaScript challenges without requiring explicit deobfuscation and parsing of Cloudflare's JavaScript code.

Note: Cloudscraper is an actively maintained open-source project, which is a rarity for open-source Cloudflare tech. It has more than 3.8k stars on GitHub.

How to Scrape with Cloudscraper

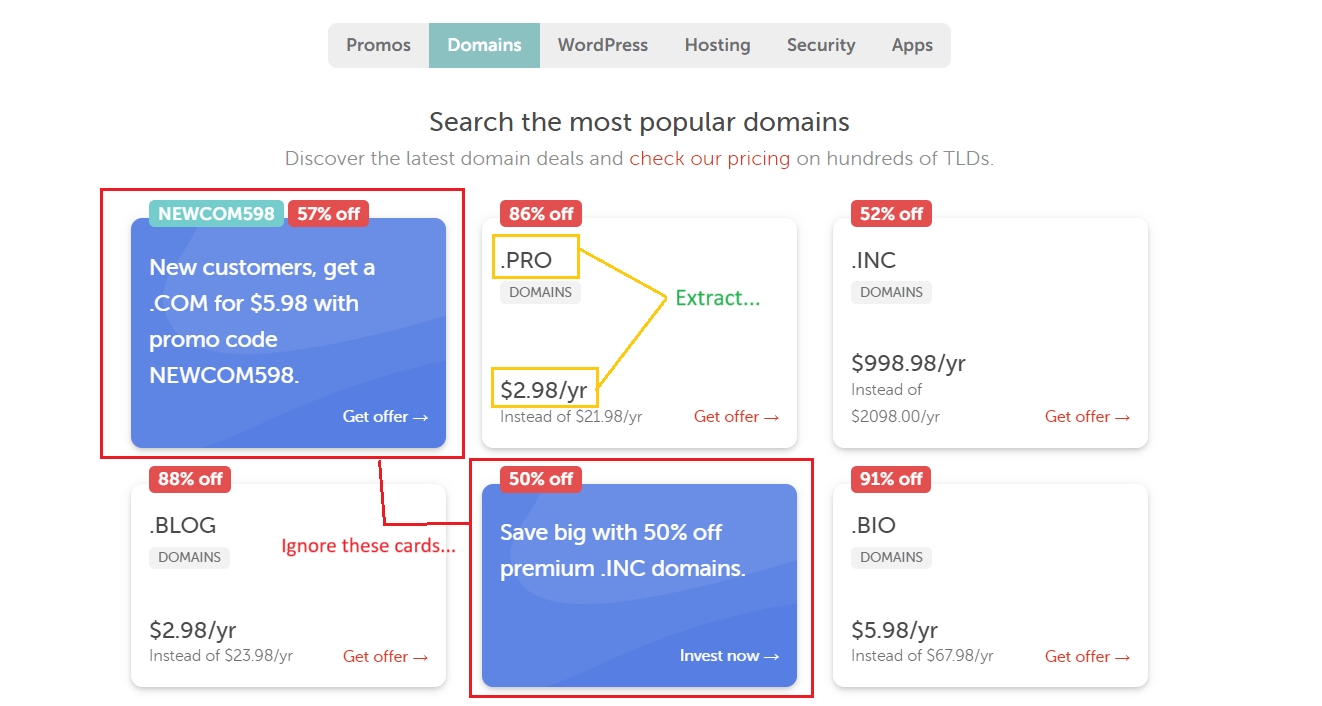

Let's take a step-by-step look at scraping with Cloudscraper in Python. We'll extract the domain names and prices from namecheap.com.

While extracting data, you may come across unrelated cards such as those offering information about discounted ‘.COM’ and ‘.INC’ domains. We’ll simply ignore these cards.

Step 1. Prerequisites

Before you start, make sure you meet all the following requirements:

- Python: Make sure you have Python installed; you can download the latest version from the official website. For this blog, we’re using Python 3.12.2.

- Code Editor: Choose a code editor, such as Visual Studio Code, PyCharm, or Jupyter Notebook, according to your preference.

- Libraries: Create a virtual environment using virtualenv. Once inside the virtual environment, run the following command in your terminal to install the required libraries.

pip install cloudscraper==1.2.71 beautifulsoup4==4.12.3

Step 2. Define the HTML Fetching Function

Define a function that scrapes a Cloudflare-protected website and fetches the HTML content from the provided URL using the cloudscraper library.

def fetch_html_content(url):

Step 3. Create a Cloudscraper Instance

To bypass bot protection, create a Cloudscraper instance with advanced parameters including browser user agent, captcha handling, and JavaScript interpreter.

- JavaScript Interpreters and Engines: Specifies the JavaScript engine used to execute the anti-bot challenges. In our case, "nodejs" is used.

- Browser/User-Agent Filtering: Controls how and which "User-Agent" is randomly selected. We’re using mobile Chrome User-Agents on iPhone in this example. Other options are available, such as mobile Chrome User-Agents on Android, desktop Chrome User-Agents on Windows, or any custom user agent.

- 3rd-Party Captcha Solvers: Cloudscraper currently supports several third-party captcha solvers: 2captcha, AntiCaptcha, CapSolver, CapMonster Cloud, DeathByCaptcha, and 9kw. This example uses 2captcha as the captcha provider, so sign up on 2Captcha to get the required API key.

- Delay: The Cloudflare IUAM challenge requires the browser to wait for approximately five seconds before submitting the challenge answer. If you want to override this delay, you can do so using the delay parameter.

Here’s the sample code snippet:

scraper = cloudscraper.create_scraper(
    interpreter="nodejs",
    delay=10,
    browser={
        "browser": "chrome",
        "platform": "ios",
        "desktop": False,
    },
    captcha={
        "provider": "2captcha",
        "api_key": "YOUR_2CAPTCHA_API_KEY",
    },
)
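If the target site isn't CAPTCHA-protected, the captcha argument can be left out. One way to keep both variants in a single script is to assemble the keyword arguments first. The sketch below assumes the same options as the snippet above; the helper name `build_scraper_kwargs` is hypothetical and not part of cloudscraper.

```python
def build_scraper_kwargs(captcha_api_key=None, delay=10):
    """Build keyword arguments for cloudscraper.create_scraper().

    Hypothetical helper (not part of cloudscraper): it only assembles the
    options used in this tutorial and omits the captcha settings when no
    API key is supplied.
    """
    kwargs = {
        "interpreter": "nodejs",
        "delay": delay,
        "browser": {"browser": "chrome", "platform": "ios", "desktop": False},
    }
    if captcha_api_key:
        kwargs["captcha"] = {"provider": "2captcha", "api_key": captcha_api_key}
    return kwargs


if __name__ == "__main__":
    # Usage (requires cloudscraper to be installed):
    #   import cloudscraper
    #   scraper = cloudscraper.create_scraper(**build_scraper_kwargs())
    print(build_scraper_kwargs())
```

Passing an API key adds the captcha settings; calling the helper without one leaves them out entirely.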

Step 4. Make Request to the Website

To use cloudscraper, you can follow the same steps as you would with Requests. Cloudscraper works just like a Requests Session object. Instead of using requests.get() or requests.post(), you can use scraper.get() or scraper.post().

Once you’ve made the request, check the response status code. A status code of 200 means the request succeeded and the page content was returned, so you can go ahead and parse it.

response = scraper.get(url)
if response.status_code == 200:
    return response.text
else:
    print(f"Failed to fetch URL: {url}. Status code: {response.status_code}")
    return None
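Because the scraper behaves like a Requests Session, a fetch helper can accept any object with a Requests-style get() method. The sketch below adds a simple retry loop around the status check. Retrying is an optional extension, not part of this tutorial's script, and the name `fetch_with_retries` and its parameters are hypothetical.

```python
import time


def fetch_with_retries(session, url, attempts=3, backoff_seconds=2.0):
    """Return the page HTML, or None if every attempt fails.

    `session` is any object with a Requests-style get() method, such as
    the scraper returned by cloudscraper.create_scraper().
    """
    for attempt in range(1, attempts + 1):
        try:
            response = session.get(url)
            if response.status_code == 200:
                return response.text
            print(f"Attempt {attempt}: status code {response.status_code}")
        except Exception as exc:  # network errors, failed challenges, etc.
            print(f"Attempt {attempt}: {exc}")
        if attempt < attempts:
            # Wait a little longer before each new attempt
            time.sleep(backoff_seconds * attempt)
    return None
```

With the scraper from Step 3, this could be called as `fetch_with_retries(scraper, "https://www.namecheap.com/")`.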

Step 5. Extract Domain Names and Prices

This function extracts domain names and their corresponding prices from the provided HTML content.

def extract_domain_prices(html_content):

Step 6. Parsing HTML Content with BeautifulSoup

Create a BeautifulSoup object to parse HTML content. html_content contains the raw HTML content of the page and html.parser specifies the parser to be used.

soup = BeautifulSoup(html_content, "html.parser")

Step 7. Find Listing Elements

The next step is to locate all the product card elements present on the webpage, as these elements hold the information we need to scrape.

product_cards = soup.find_all(class_="nchp-product-card")

Use BeautifulSoup's find_all() method to locate all HTML elements with the class name nchp-product-card.

Step 8. Extract Information from Each Listing

To extract the necessary data, iterate through each listing element. For each piece of data, use a conditional expression to check whether the element exists. If it doesn't, assign None as the value.

for card in product_cards:

Extract Domain Names

First, identify the element using the nchp-product-card__name class. Then, extract and trim the text from the element.

domain_name_elem = card.find(class_="nchp-product-card__name")

domain_name = domain_name_elem.text.strip() if domain_name_elem else None

Extract Domain Prices

You can then use the class nchp-product-card__price-text to find the element containing the price information. Extract the text from the price element and remove leading and trailing whitespace.

price_elem = card.find(class_="nchp-product-card__price-text")

price_text = price_elem.text.strip() if price_elem else None
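To see how the None fallback behaves, here is a small self-contained sketch that runs the same lookups on made-up markup. The HTML and prices are invented for illustration and only reuse the class names from this tutorial; the real page structure may differ.

```python
from bs4 import BeautifulSoup

# Made-up markup that reuses the class names from this tutorial.
SAMPLE_HTML = """
<div class="nchp-product-card">
  <span class="nchp-product-card__name"> .com </span>
  <span class="nchp-product-card__price-text"> $5.98/yr </span>
</div>
<div class="nchp-product-card">
  <span class="nchp-product-card__name">.xyz</span>
</div>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")
rows = []
for card in soup.find_all(class_="nchp-product-card"):
    name_elem = card.find(class_="nchp-product-card__name")
    price_elem = card.find(class_="nchp-product-card__price-text")
    # Fall back to None when an element is missing from the card
    name = name_elem.text.strip() if name_elem else None
    price = price_elem.text.strip() if price_elem else None
    rows.append((name, price))

print(rows)  # [('.com', '$5.98/yr'), ('.xyz', None)]
```

The second card has no price element, so its price comes back as None instead of raising an AttributeError.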

Step 9. Final Check Condition

We need to check if the domain name starts with a dot. This is important because while extracting data using classes, other data might also be extracted besides domains. Therefore, we’ll run a separate check to extract only the domains.

In the image below, you can see that unnecessary information (like starter email, private email, or font maker) is also fetched. We need to extract only the domain names and prices from this data.

Apply the following check condition to extract only the domain names and their corresponding prices.

if domain_name and domain_name.startswith("."):
    domain_prices[domain_name] = price_text
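The effect of this check is easy to see on a few sample values. The pairs below are hypothetical stand-ins for what the card loop might produce, not real scraped data.

```python
# Hypothetical (name, price) pairs, made up for illustration.
scraped = [
    (".com", "$5.98/yr"),
    ("Starter Email", "$0.99/mo"),
    (None, "$1.00"),
    (".inc", None),
]

domain_prices = {}
for domain_name, price_text in scraped:
    # Keep only entries whose name looks like a TLD, e.g. ".com";
    # the "domain_name and" part skips cards with no name at all.
    if domain_name and domain_name.startswith("."):
        domain_prices[domain_name] = price_text

print(domain_prices)  # {'.com': '$5.98/yr', '.inc': None}
```

Note that a domain with a missing price is still kept, with None as its value.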

Step 10. Save Data to a JSON File

Once you’ve extracted the data from the listings, save it in a JSON file.

with open("domain_prices.json", "w") as json_file:
    json.dump(domain_prices, json_file, indent=4)
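As a quick sanity check, you can read the file back and compare it with the dictionary you saved. The sketch below uses made-up sample data and writes to a temporary directory (an assumption for the demo, so it doesn't overwrite your real output file).

```python
import json
import os
import tempfile

domain_prices = {".com": "$5.98/yr", ".xyz": None}  # made-up sample data

# Write to a temporary directory for this demonstration
path = os.path.join(tempfile.mkdtemp(), "domain_prices.json")
with open(path, "w", encoding="utf-8") as json_file:
    json.dump(domain_prices, json_file, indent=4)

# Read the file back to confirm it round-trips cleanly
with open(path, encoding="utf-8") as json_file:
    loaded = json.load(json_file)

print(loaded == domain_prices)  # True
```

A missing price (None) is written as null in the JSON file and loads back as None.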

Step 11. Run the Scraper

Lastly, call the required functions to run the scraper and store data in a JSON file.

if __name__ == "__main__":
    url = "https://www.namecheap.com/"
    html_content = fetch_html_content(url)
    if html_content:
        domain_prices = extract_domain_prices(html_content)
        if domain_prices:
            # Store data in a JSON file
            pass

The final output is:

Drawbacks of Using Cloudscraper

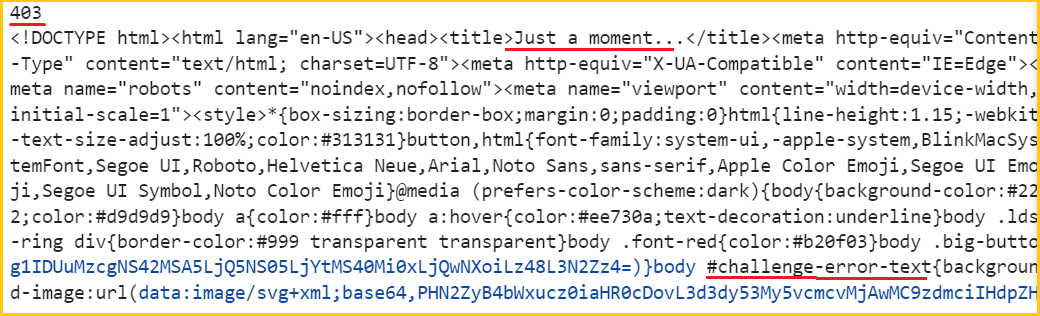

It's important to note that Cloudscraper can't access every Cloudflare-protected website. For some of them, you'll receive a 403 status code. This is because Cloudflare keeps updating its protection, and open-source solutions like Cloudscraper can become outdated and stop working.

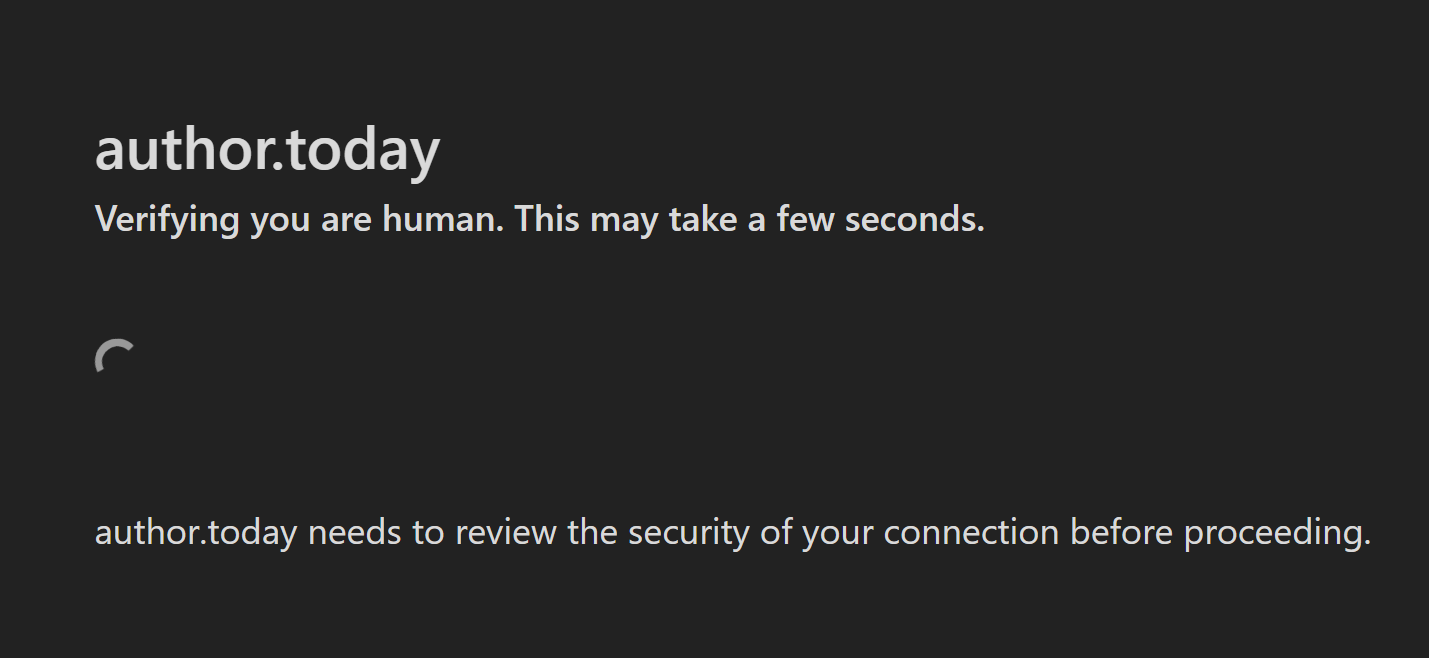

Currently, Cloudscraper cannot scrape websites protected by the newer version of Cloudflare, and a significant number of websites use this updated version. One such example is the author.today website.

When visiting the website, we’re automatically redirected to the Cloudflare waiting room for a security check.

Cloudflare accepts our connection and redirects us to the homepage since we’re sending the request from a real browser.

Let's try to access the website with Cloudscraper.

import cloudscraper

url = "https://author.today/"

scraper = cloudscraper.create_scraper()

info = scraper.get(url)

print(info.status_code)

print(info.text)

The output received:

As you can see, Cloudscraper isn't working against newer Cloudflare versions. The error message suggests that the challenge page is blocking access to the website. In the next section, we’ll discuss an alternative to solve this problem.
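When a request is blocked like this, it helps to detect it in code rather than by reading the output. The sketch below is a rough heuristic: the function name `looks_blocked` is hypothetical, and the status codes and text markers are assumptions based on commonly seen Cloudflare challenge pages, so adjust them for your target site.

```python
def looks_blocked(status_code, body):
    """Heuristic check for a Cloudflare block or challenge page.

    The status codes and markers are assumptions, not an official list.
    """
    markers = ("just a moment", "attention required", "cf-chl")
    text = (body or "").lower()
    # Blocks are often served with a 403 or 503 status code
    if status_code in (403, 503):
        return True
    # A challenge page can also be recognized by its typical wording
    return any(marker in text for marker in markers)
```

With the response from the snippet above, this could be called as `looks_blocked(info.status_code, info.text)`.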

Alternative to Cloudscraper

If you've been facing issues with newer versions of Cloudflare, it’s time to switch to alternative solutions. ScrapingBee API is a great option to consider, as it takes care of maintaining your code infrastructure and ensures that you stay up-to-date with the latest software updates from Cloudflare.

ScrapingBee offers a fresh pool of proxies that can handle even the most challenging websites. To use this pool, you simply need to add stealth_proxy=True to your API calls. The ScrapingBee Python SDK makes it easier to interact with ScrapingBee's API.

Don't forget to replace "Your_ScrapingBee_API_Key" with your actual API key, which you can retrieve from your ScrapingBee dashboard.

# Install the Python ScrapingBee library:
# pip install scrapingbee
from scrapingbee import ScrapingBeeClient

client = ScrapingBeeClient(
    api_key="Your_ScrapingBee_API_Key"
)

response = client.get(
    "https://author.today/",
    params={
        "stealth_proxy": "True",
    },
)

print("Response HTTP Status Code: ", response.status_code)

with open("response.html", "wb") as f:
    f.write(response.content)

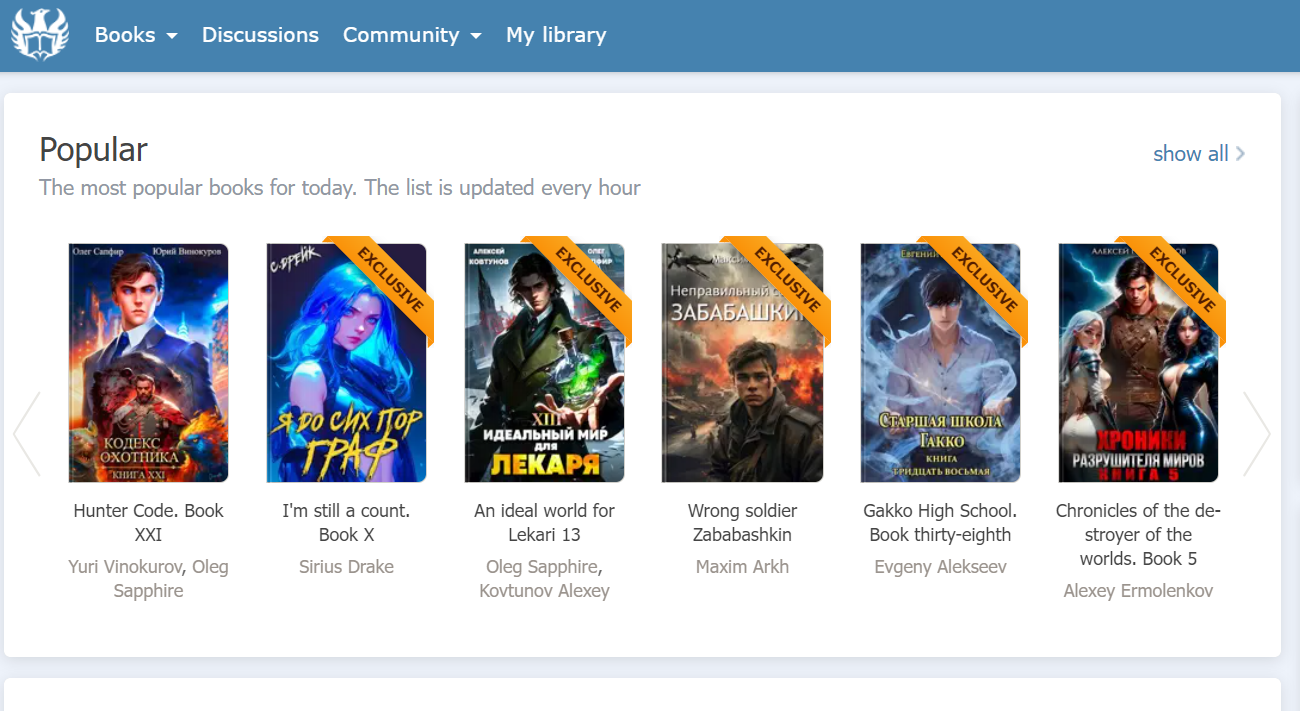

The output is:

We did it!

We’ve successfully fetched the HTML and stored it in a file named "response.html". When you open this file, you’ll see the above image. This shows that we’ve successfully bypassed Cloudflare's protection and retrieved the website's HTML.

Wrapping Up

You've learned how to scrape Cloudflare-protected websites using Cloudscraper, an open-source Python library specifically designed to bypass Cloudflare. You've also learned some of Cloudscraper's advanced functions, such as handling captchas and user agents. Finally, you've explored both the limitations of Cloudscraper and the best alternatives available.