In this article, we will discuss how to efficiently download files with Puppeteer. Automating file downloads can sometimes be complicated: you may need to explicitly specify a download location, download multiple files at the same time, and so on. Unfortunately, these use cases are not well documented. That’s why I wrote this article to share some of the tips and tricks I came up with over the years while working with Puppeteer. We will go through several practical examples and take a deep dive into the Puppeteer APIs used for file downloads. Let’s get started!

Downloading an image by simulating a button click

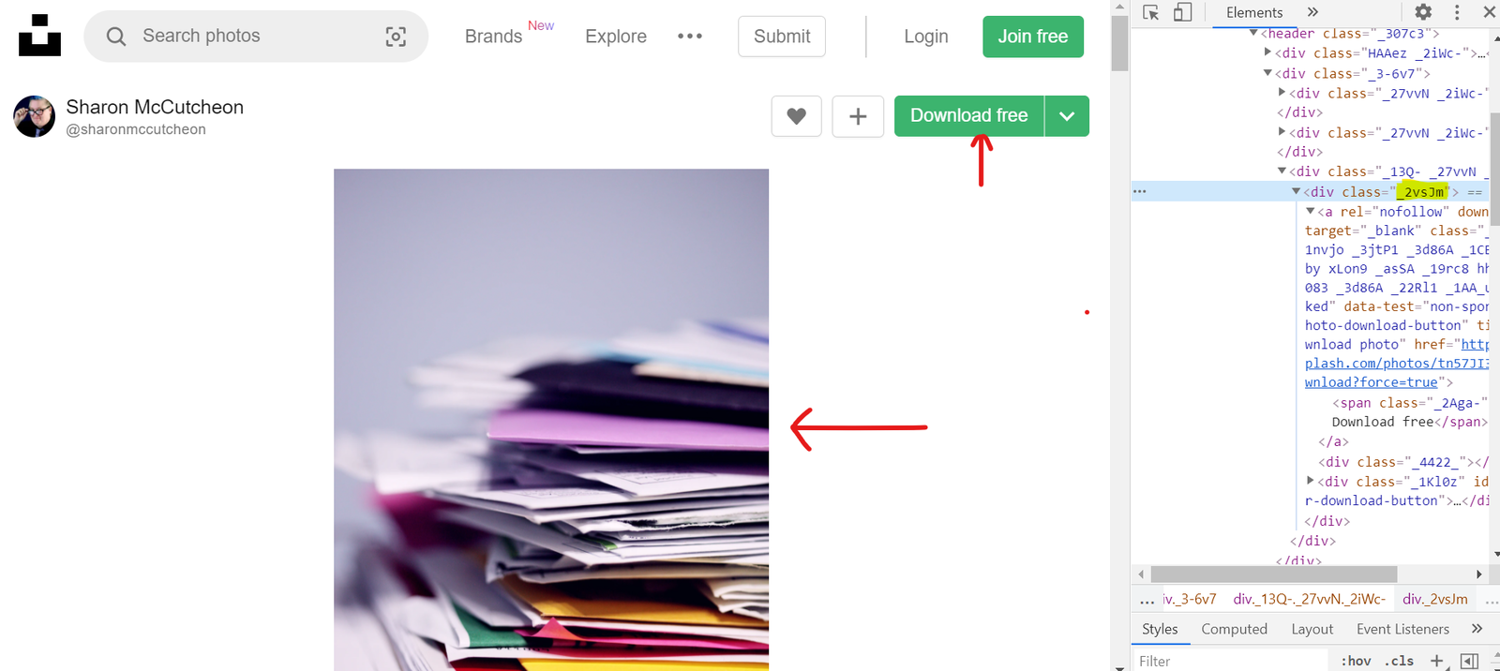

In the first example, we will take a look at a simple scenario where we automate a button click to download an image. We will open up a URL in a new browser tab. Then we will find the download button on the page. Finally, we will click on the download button. Sounds simple right? Here’s the image we are trying to download:

We can use the following script to automate the download process.

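```javascript
const puppeteer = require('puppeteer');

async function simplefileDownload() {
  // Launch a visible (non-headless) browser so we can watch the automation
  const browser = await puppeteer.launch({
    headless: false
  });
  const page = await browser.newPage();
  await page.goto(
    'https://unsplash.com/photos/tn57JI3CewI',
    { waitUntil: 'networkidle2' }
  );
  // Click the download button. This class name comes from Unsplash's markup
  // at the time of writing and may have changed since.
  await page.click('._2vsJm');
}

simplefileDownload();
```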

I set the `headless` option to `false`, which opens a visible browser window so we can observe the automation in real time. The rest of the script is fairly self-explanatory. We launch a browser instance, open a new tab with the given URL, and use the `click()` function to simulate the button click. Let’s run this script.

It works pretty well. However, there is one minor issue: the image is saved to the operating system’s default download folder.

ℹ️ There is a tight coupling between Chrome and the local file system of our operating system, and the default download location varies from OS to OS. If we want our script to process or remove the files afterwards, this coupling makes that much harder.

We can avoid the default download path by explicitly specifying the path in our script. Let’s update our script to set the path.

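```javascript
const puppeteer = require('puppeteer');
const path = require('path');

// Absolute path of the folder where downloads should be saved
const downloadPath = path.resolve('./download');

async function simplefileDownload() {
  const browser = await puppeteer.launch({
    headless: false
  });
  const page = await browser.newPage();
  await page.goto(
    'https://unsplash.com/photos/tn57JI3CewI',
    { waitUntil: 'networkidle2' }
  );
  // Open a Chrome DevTools Protocol (CDP) session and tell Chrome where to save
  // downloads. The older page._client is a private property whose shape changed
  // between Puppeteer versions; newer versions also offer page.createCDPSession().
  const client = await page.target().createCDPSession();
  await client.send('Page.setDownloadBehavior', {
    behavior: 'allow',
    downloadPath: downloadPath
  });
  await page.click('._2vsJm');
}

simplefileDownload();
```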

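We use Node’s built-in `path` module to resolve an absolute download path. Then, before clicking the button, we send the `Page.setDownloadBehavior` command of the Chrome DevTools Protocol (CDP) through a CDP session. This tells Chrome to save downloads to that path instead of the default folder.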
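Because the script now owns the download folder, it can also prepare that folder. The sketch below uses a hypothetical helper, `prepareDownloadDir`, which is our own name and not a Puppeteer API. It resolves the path, deletes the folder along with any leftovers from earlier runs, and recreates it empty. After that, any file found in the folder came from the current run. It relies only on Node’s built-in `fs` and `path` modules (Node 14.14+ for `fs.rmSync`). Point it only at a folder dedicated to the script, since its contents are deleted.

```javascript
const fs = require('fs');
const path = require('path');

// Hypothetical helper: return an absolute path to an existing, empty folder.
// WARNING: everything already inside `dir` is deleted.
function prepareDownloadDir(dir) {
  const absolute = path.resolve(dir);
  // Remove the folder (if present) and recreate it so it starts out empty
  fs.rmSync(absolute, { recursive: true, force: true });
  fs.mkdirSync(absolute, { recursive: true });
  return absolute;
}

module.exports = { prepareDownloadDir };
```

In the script above, `const downloadPath = prepareDownloadDir('./download');` could replace the plain `path.resolve` call.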

Replicating the download request

Next, let’s look at how we can download files by making the HTTP request ourselves. Instead of simulating clicks, we will find the image’s source URL on the page and fetch it directly. Here’s how it’s done:

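```javascript
const puppeteer = require('puppeteer');
const fs = require('fs');
const https = require('https');

async function downloadWithLinks() {
  const browser = await puppeteer.launch({
    headless: false
  });
  const page = await browser.newPage();
  await page.goto(
    'https://unsplash.com/photos/tn57JI3CewI',
    { waitUntil: 'networkidle2' }
  );

  // Read the image URL straight from the <img> element's src property
  const imgUrl = await page.$eval('._2UpQX', img => img.src);

  // Fetch the image with Node's https module and stream it to disk
  https.get(imgUrl, res => {
    const stream = fs.createWriteStream('somepic.png');
    res.pipe(stream);
    stream.on('finish', () => {
      stream.close();
    });
  });

  // The browser is no longer needed: the download runs in Node, not in Chrome
  await browser.close();
}

downloadWithLinks();
```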

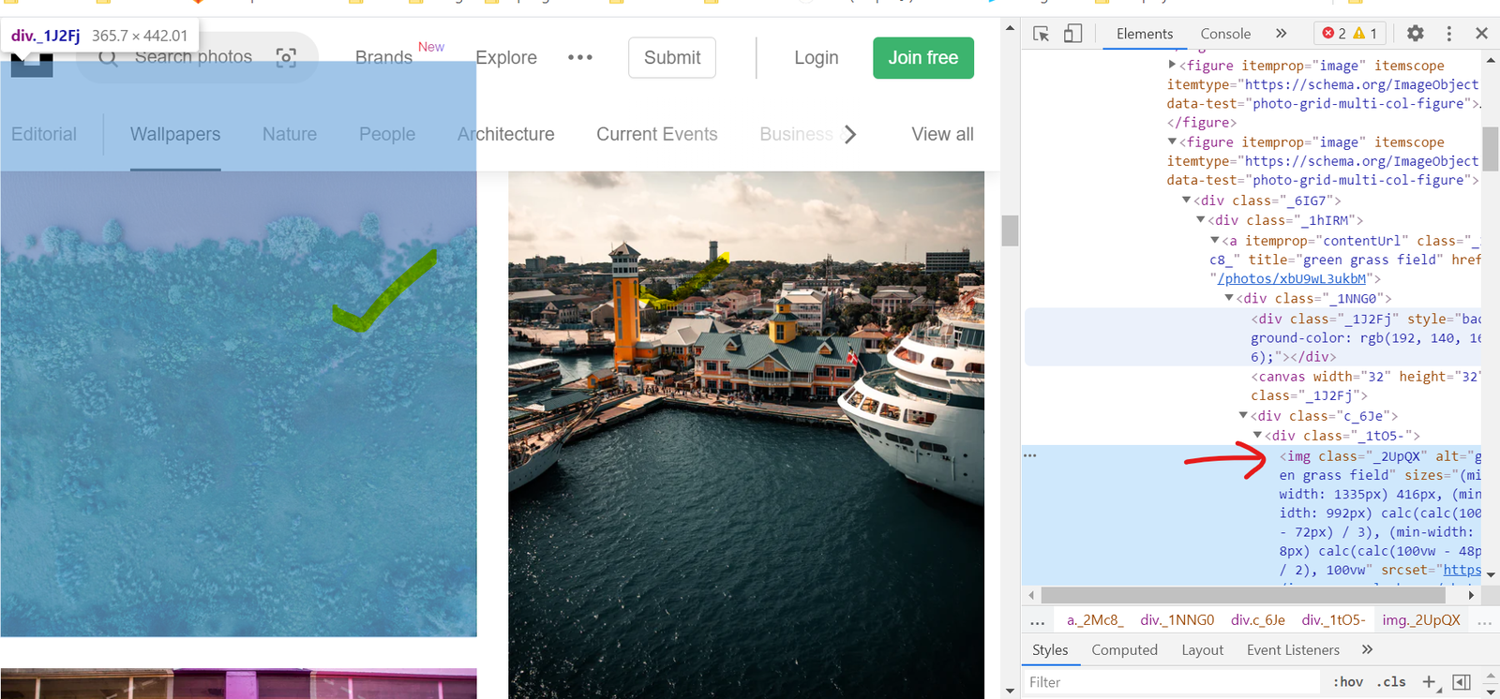

The first portion of this script is nearly identical to our previous example. Things get interesting with the `page.$eval()` call. We find the image’s DOM node directly on the page and read its `src` property, which is a URL. We then make an HTTPS GET request to this URL and use Node’s native `fs` module to write the response stream to our local file system. This method only works if the URL of the file we want to download is exposed in the DOM.
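The callback-based `https.get` call above does not tell the caller when the file has been fully written. It would also save the body of an error or redirect response as if it were the image. Below is a minimal sketch of a promise-based alternative, assuming Node 15 or later for `stream/promises`. The helper name `downloadFile` and the limit of five redirects are our own choices, not part of Puppeteer or Node. The helper also accepts plain `http` URLs.

```javascript
const fs = require('fs');
const http = require('http');
const https = require('https');
const { pipeline } = require('stream/promises');

// Hypothetical helper: download `url` to `dest`, resolving with `dest`
// once the file is completely written to disk.
function downloadFile(url, dest, redirectsLeft = 5) {
  const client = url.startsWith('https:') ? https : http;
  return new Promise((resolve, reject) => {
    client.get(url, res => {
      const { statusCode, headers } = res;
      // Follow redirects, which CDNs commonly answer with 301/302
      if (statusCode >= 300 && statusCode < 400 && headers.location) {
        res.resume(); // discard the redirect body
        if (redirectsLeft === 0) {
          return reject(new Error('Too many redirects'));
        }
        const next = new URL(headers.location, url).toString();
        return resolve(downloadFile(next, dest, redirectsLeft - 1));
      }
      // Refuse to save error pages as if they were images
      if (statusCode !== 200) {
        res.resume();
        return reject(new Error(`Request failed with status ${statusCode}`));
      }
      // pipeline() resolves after the write stream has finished
      pipeline(res, fs.createWriteStream(dest)).then(() => resolve(dest), reject);
    }).on('error', reject);
  });
}

module.exports = { downloadFile };
```

Inside `downloadWithLinks`, the `https.get(...)` block could then become `await downloadFile(imgUrl, 'somepic.png');`, placed before `await browser.close();`.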

Why would you want to use this method?

Why use this method instead of simulating a button click, as in the previous example? First of all, it can be faster, and we can download multiple files simultaneously.

Let’s say a page has a couple of images and we want to download all of them. Clicking one of these images takes the user to a new page, and from there the user can download that image. To download the next image, the user has to go back to the previous page. This is tedious. We could write a script that mimics this behavior, but we don’t need to if the first page exposes the image URLs in the DOM.

Let’s dive into an example of this scenario. Say we would like to scrape some images from Unsplash’s wallpapers page (https://unsplash.com/t/wallpapers). Inspecting the DOM, we can see that these images have `src` properties.

The following script will gather all the image sources and download them.

```javascript
const puppeteer = require('puppeteer');
const fs = require('fs');
const https = require('https');

async function downloadMultiple() {
  const browser = await puppeteer.launch({
    headless: false
  });
  const page = await browser.newPage();
  await page.goto(
    'https://unsplash.com/t/wallpapers',
    { waitUntil: 'networkidle2' }
  );

  // Collect the src of every matching <img> element on the page
  const imgUrls = await page.$$eval('._2UpQX', imgElms =>
    imgElms.map(elm => elm.src)
  );

  // Start one HTTPS download per image; the requests run concurrently
  imgUrls.forEach((url, index) => {
    https.get(url, res => {
      const stream = fs.createWriteStream(`download-${index}.png`);
      res.pipe(stream);
      stream.on('finish', () => {
        stream.close();
      });
    });
  });

  await browser.close();
}

downloadMultiple();
```
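Calling `https.get` inside `forEach` starts every request at once and returns before any of them finishes. On a page with hundreds of images, you may prefer to cap how many downloads run at the same time. The sketch below is a generic helper; `mapWithConcurrency` is a hypothetical name, not a Node or Puppeteer API. It runs an async function over a list with at most `limit` calls in flight and resolves with the results in input order.

```javascript
// Hypothetical helper: run `worker(item, index)` for every item,
// with at most `limit` calls in flight at any moment.
// If any call rejects, the returned promise rejects.
async function mapWithConcurrency(items, limit, worker) {
  const results = new Array(items.length);
  let next = 0; // index of the next item to start

  // Each runner keeps pulling items until the list is exhausted. Sharing
  // `next` is safe because JavaScript code runs on a single thread.
  async function runner() {
    while (next < items.length) {
      const index = next++;
      results[index] = await worker(items[index], index);
    }
  }

  const runnerCount = Math.max(1, Math.min(limit, items.length));
  await Promise.all(Array.from({ length: runnerCount }, runner));
  return results;
}

module.exports = { mapWithConcurrency };
```

With it, the `forEach` loop in `downloadMultiple` could become `await mapWithConcurrency(imgUrls, 4, (url, index) => saveImage(url, index));`. Here `saveImage` is a hypothetical function that wraps the `https.get` call in a promise.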

Downloading multiple files in parallel

In this next part, we will dive into a more advanced topic: parallel downloads. Downloading small files is easy. However, when you have to download multiple large files, things start to get complicated. Node.js executes JavaScript on a single thread driven by one event loop, so a single script, and the single browser it controls, can become a bottleneck. Say we have to download 10 files, each 1 gigabyte in size and each taking about 3 minutes. If we handle them one after another in a single process, we will wait 10 × 3 = 30 minutes for the task to finish. That is far too slow.
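The arithmetic above assumes the files are handled one after another. Here is a rough model for splitting the files across several parallel processes. It assumes every download takes the same time and that network bandwidth is not the bottleneck; if it is, extra processes will not help. Under those assumptions, the total time is about ⌈files / workers⌉ × time per file. The hypothetical `estimateMinutes` function below simply encodes that formula.

```javascript
// Rough model with simplifying assumptions: files are split evenly across
// parallel workers, each file takes the same time, and bandwidth is not the limit.
function estimateMinutes(fileCount, minutesPerFile, workers = 1) {
  const rounds = Math.ceil(fileCount / workers); // files each worker handles in turn
  return rounds * minutesPerFile;
}

console.log(estimateMinutes(10, 3));    // 30: one process, sequential
console.log(estimateMinutes(10, 3, 4)); // 9: ceil(10 / 4) = 3 rounds × 3 minutes
```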

💡 Learn more about the single-threaded architecture of Node.js here.

So, how do we solve this problem?

The answer is parallel processing. A multi-core CPU can run several processes at the same time. Node’s built-in `child_process` module lets us fork multiple child processes from one script, and child processes are one of the ways Node.js supports parallel work. We can combine the `child_process` module with our Puppeteer script and download files in parallel.

💡 If you are not familiar with how child processes work in Node.js, I highly encourage you to give this article a read.

The code snippet below is a simple example of running parallel downloads with Puppeteer.

```javascript
// main.js
const { fork } = require('child_process');

const urls = [
  'https://unsplash.com/photos/AMiglZWQSQQ',
  'https://unsplash.com/photos/TbEqd-GNC5w',
  'https://unsplash.com/photos/FiVujM6egyU',
  'https://unsplash.com/photos/yGBJB6lHYVw'
];

urls.forEach((url) => {
  // Fork a separate Node.js process for each URL
  const child = fork('./child.js');

  child.on('exit', (code) => {
    console.log(`child_process for ${url} exited with code ${code}`);
  });

  // The child sends one message when it is ready; reply with its URL
  child.once('message', () => {
    child.send(url);
  });
});
```

We have two files in this solution. The first one is main.js. In this file, we fork our child processes and send each one the URL of an image page we want to download. Every URL gets its own child process, so each download runs in a separate Node.js process with its own event loop.
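Forking one process per URL is fine for four images. With hundreds of URLs, however, it would launch hundreds of Chrome instances at once. One option, sketched here under that assumption, is to fork a fixed number of workers and give each one a batch of URLs. The `splitIntoBatches` helper is hypothetical. It deals the URLs out round-robin so that batch sizes differ by at most one.

```javascript
// Hypothetical helper: deal `items` round-robin into `workerCount` batches.
function splitIntoBatches(items, workerCount) {
  if (workerCount < 1) {
    throw new RangeError('workerCount must be at least 1');
  }
  const batches = Array.from({ length: workerCount }, () => []);
  items.forEach((item, i) => {
    batches[i % workerCount].push(item);
  });
  // Drop empty batches so we never fork a worker with nothing to do
  return batches.filter((batch) => batch.length > 0);
}

const urls = ['a', 'b', 'c', 'd', 'e'];
console.log(splitIntoBatches(urls, 2)); // [ [ 'a', 'c', 'e' ], [ 'b', 'd' ] ]
```

Each forked worker could then receive its whole batch with a single `child.send(batch)` call. That would require child.js to loop over an array instead of handling one URL per message.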

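```javascript
// child.js
const puppeteer = require('puppeteer');
const path = require('path');

const downloadPath = path.resolve('./download');

process.on('message', async (url) => {
  console.log('CHILD: url received from parent process', url);
  try {
    await download(url);
  } catch (err) {
    console.error('CHILD: download failed', err);
    process.exitCode = 1;
  }
  // Close the IPC channel so this process can exit once its work is done
  if (process.connected) {
    process.disconnect();
  }
});

// Tell the parent process we are ready to receive a URL
process.send('ready');

async function download(url) {
  const browser = await puppeteer.launch({
    headless: false
  });
  try {
    const page = await browser.newPage();
    await page.goto(url, { waitUntil: 'networkidle2' });

    // Download logic goes here
    const client = await page.target().createCDPSession();
    await client.send('Page.setDownloadBehavior', {
      behavior: 'allow',
      downloadPath: downloadPath
    });
    await page.click('._2vsJm');

    // Give the download time to finish before Chrome is closed
    // (10 seconds is an arbitrary value; adjust it to your file sizes)
    await new Promise(resolve => setTimeout(resolve, 10000));
  } finally {
    await browser.close();
  }
}
```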

The main download functionality is implemented in child.js. Observe that the download function here is essentially the same as in our earlier solution. The main difference is the `process.on('message')` handler. It receives a message (in our case, a URL) from the main process and runs the download inside the child process, outside of the main process’s event loop. As a result, four Chrome instances download files in parallel.
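The click-based examples in this article never check when the file has actually landed on disk, and closing the browser too early cancels the download. A common workaround is to poll the download folder. The sketch below does this with a hypothetical helper rather than a Puppeteer API. It relies on the fact that Chrome saves an in-progress download under a temporary `.crdownload` name and renames it once the transfer completes. It also assumes the folder was empty before the click. The polling interval and timeout defaults are arbitrary.

```javascript
const fs = require('fs');
const path = require('path');

// Hypothetical helper: resolve with the full path of the first completed file
// in `dir`, or reject after `timeoutMs`. Unfinished Chrome downloads end in
// `.crdownload`, and hidden temporary files start with a dot; both are ignored.
async function waitForDownload(dir, { timeoutMs = 30000, intervalMs = 250 } = {}) {
  const deadline = Date.now() + timeoutMs;
  while (Date.now() < deadline) {
    const done = fs.existsSync(dir)
      ? fs.readdirSync(dir).filter(
          (name) => !name.endsWith('.crdownload') && !name.startsWith('.')
        )
      : [];
    if (done.length > 0) {
      return path.join(dir, done[0]);
    }
    await new Promise((resolve) => setTimeout(resolve, intervalMs));
  }
  throw new Error(`No completed download in ${dir} after ${timeoutMs} ms`);
}

module.exports = { waitForDownload };
```

After `await page.click(...)`, a script could call `await waitForDownload(downloadPath)` and only then `await browser.close()`.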

Final Thoughts

I hope the explanations and code examples above gave you a better understanding of how file downloads work in Puppeteer. There are many ways to download files with Puppeteer. Once you have a solid understanding of Puppeteer’s API and how it fits into the Node.js ecosystem, you can come up with custom solutions best suited to your needs.

Further reading:

- How to submit forms with Puppeteer. This is a very common use case, and you may need to submit a form before you can download a file.

- Intercepting requests with Puppeteer

Happy Scraping!

Kevin worked in the web scraping industry for 10 years before co-founding ScrapingBee. He is also the author of the Java Web Scraping Handbook.