If you're looking for the best web scraping tools in 2025, you'll quickly see there are a lot of choices. Some are simple libraries, others are full SaaS platforms. Each promises speed, scale, or AI magic, but not every tool will fit your project.

That's why we put together this ranked list. Below, you'll find the top web scraping tools of 2025, with clear breakdowns of features, pros, cons, and pricing. Whether you want a reliable service like ScrapingBee or a free open-source option, you'll see what works best for your needs.

How to choose a web scraping tool

Picking a scraping platform shouldn't feel like throwing darts blindfolded 🎯. The best tool depends on what your project actually needs.

Key things to consider

- Frequency – is it a one-time job or a regular schedule?

- Data type – HTML, JSON, media, or structured formats?

- Output – CSV, JSON, Excel, or direct to a database/API?

- Volume – small datasets or large-scale crawls?

- Complexity – simple pages or JavaScript-heavy apps?

- Obstacles – CAPTCHAs, rate limits, geo-blocks?

- Expertise – do you want no-code simplicity or full developer control?

Once you've mapped this out, comparing tools gets much easier. For most developers and teams in 2025, API-based services like ScrapingBee hit the sweet spot between ease of use, scalability, and cost.

1. ScrapingBee

When it comes to the best web scraping tools in 2025, ScrapingBee takes the top spot. It's built around a simple Web Scraping API that lets you focus on extracting data instead of wrestling with proxies, browsers, or CAPTCHAs. Developers and agencies like it because it scales without extra setup.

ScrapingBee key features

- API-first design with Python and JavaScript client libraries

- Full headless browser and JavaScript rendering support

- Automatic proxy rotation (datacenter + residential)

- CAPTCHA handling and reCAPTCHA bypassing

- AI-powered scraping using plain English instructions

- Structured JSON output and screenshot capture
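
As a rough illustration of the API-first design and JavaScript rendering listed above, the sketch below builds a ScrapingBee request with only Python's standard library. The endpoint and the `api_key`, `url`, `render_js`, and `premium_proxy` parameter names are assumptions based on the public documentation and should be checked against the current API reference. `YOUR_API_KEY` is a placeholder, and the helper names `build_request_url` and `fetch_html` are made up for this example.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed endpoint; confirm against the current ScrapingBee API reference.
API_ENDPOINT = "https://app.scrapingbee.com/api/v1/"


def build_request_url(api_key: str, target_url: str,
                      render_js: bool = True,
                      premium_proxy: bool = False) -> str:
    """Return the full GET URL for one scraping request."""
    params = {
        "api_key": api_key,
        "url": target_url,  # urlencode percent-encodes the target URL
        "render_js": "true" if render_js else "false",
    }
    if premium_proxy:
        # Assumed flag name for routing through the premium proxy pool.
        params["premium_proxy"] = "true"
    return f"{API_ENDPOINT}?{urlencode(params)}"


def fetch_html(api_key: str, target_url: str, **options) -> str:
    """Send the request and return the response body as text."""
    request_url = build_request_url(api_key, target_url, **options)
    with urlopen(request_url, timeout=60) as response:
        return response.read().decode("utf-8", errors="replace")


if __name__ == "__main__":
    # Prints the request URL only; no network call is made here.
    print(build_request_url("YOUR_API_KEY", "https://example.com/products?page=2"))
```

Keeping URL construction separate from the network call makes the request easy to inspect or log before any credits are spent.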

ScrapingBee pros and cons

Pros

- Highly reliable and easy to scale

- Handles proxies, headers, and anti-bot systems automatically

- Clean JSON output ready for use

- Great documentation and support

Cons

- Requires basic developer skills to use the API

🔥 Want to try it fast? ScrapingBee provides a Google Colab quick start example, and the API playground in your account generates code samples for major programming languages.

ScrapingBee pricing

Plans start at $49/month, based on a credit system. You also get a pay-as-you-go option if you don't need a monthly plan. See the full details on the ScrapingBee pricing page.

💡 You can test ScrapingBee completely free with 1,000 API calls. Sign up at https://app.scrapingbee.com/account/register.

ScrapingBee best use case

ScrapingBee is the go-to choice for developers, agencies, and teams that need scalable scraping without the usual headaches. If your project involves JavaScript-heavy websites or strict anti-bot protections, this tool saves you hours of setup and maintenance.

2. Decodo's Web Scraping API

Decodo (formerly Smartproxy) offers an all-in-one Web Scraping API that takes care of proxies, browsers, CAPTCHAs, and retries so you can focus on data.

Decodo key features

- Two modes: Core (fast, structured data) and Advanced (JavaScript rendering and templates)

- Pre-built scraping templates for Amazon, Google, TikTok, Airbnb, and more

- Automatic proxy and IP rotation

- Built-in CAPTCHA bypass and retry logic

- Task scheduling and automation options

- Flexible output formats: JSON, HTML, CSV

Decodo pros and cons

Pros

- AI-enhanced scraping adapts to site changes

- Ready-made templates save setup time

- Huge proxy pool with global coverage

- Handles JavaScript-heavy sites and CAPTCHAs

- Multiple output formats and API Playground for testing

Cons

- Costs scale quickly for higher request volumes

- Some advanced features require higher tiers or technical know-how

- Core vs Advanced setup can be confusing for beginners

Decodo pricing

Pricing is tiered by volume: the more requests you commit to, the lower the per-1,000-request cost becomes. At low volumes it's relatively expensive; at scale it can reach ~$0.08 per 1,000 requests. A 7-day free trial with 1,000 requests is usually included.
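
To make the per-1,000-request arithmetic concrete, here is a small sketch. The two rates come from this article (about $0.32 per 1,000 requests at lower tiers, per the comparison table, and about $0.08 at scale). The monthly volumes are illustrative assumptions, not Decodo's actual tier thresholds.

```python
def monthly_cost(requests_per_month: int, price_per_1k: float) -> float:
    """Cost in USD for a month of requests at a flat per-1,000 rate."""
    return requests_per_month / 1000 * price_per_1k


# (label, hypothetical monthly volume, per-1,000 rate quoted in this article)
scenarios = [
    ("lower tier", 100_000, 0.32),
    ("at scale", 10_000_000, 0.08),
]

for label, volume, rate in scenarios:
    cost = monthly_cost(volume, rate)
    print(f"{label}: {volume:,} requests at ${rate:.2f}/1k = ${cost:,.2f}")
```

The unit price drops fourfold between the two scenarios, yet the total bill still grows with volume, which is why it helps to estimate request counts before committing to a tier.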

Decodo best use case

Decodo works well for marketing, SEO, or analytics teams that want reliable scraping without managing proxies or infrastructure. It's a mid-tier choice when you need flexibility and decent scale, but aren't yet playing at massive enterprise volume.

3. ScraperAPI

ScraperAPI is a plug-and-play web scraping API. You pass a URL, and it handles proxies, CAPTCHAs, and rendering in the background.

ScraperAPI key features

- Automatic proxy rotation (residential and datacenter)

- JavaScript rendering for dynamic sites

- Built-in CAPTCHA and anti-bot handling

- Prebuilt endpoints for Amazon, SERPs, and other common targets

- SDKs for multiple languages (Python, JavaScript, etc.)

ScraperAPI pros and cons

Pros

- Easy to integrate with minimal setup

- Good support for structured data and domain-specific endpoints

- Handles anti-bot measures automatically

Cons

- Costs scale quickly as volume grows

- Some "harder" requests (JS, complex sites) may consume more credits

- Less granular control compared to building your own custom scraper

ScraperAPI pricing

ScraperAPI pricing starts at $49/month with credits included. As you move to higher tiers, you get more credits and concurrency. They also offer a free trial and a small free-credit tier for light usage.

ScraperAPI best use case

ScraperAPI is best for teams who want a straightforward API to scrape many pages quickly, without dealing with proxy management. It's a strong option for SEO data collection, e-commerce monitoring, or dashboard projects.

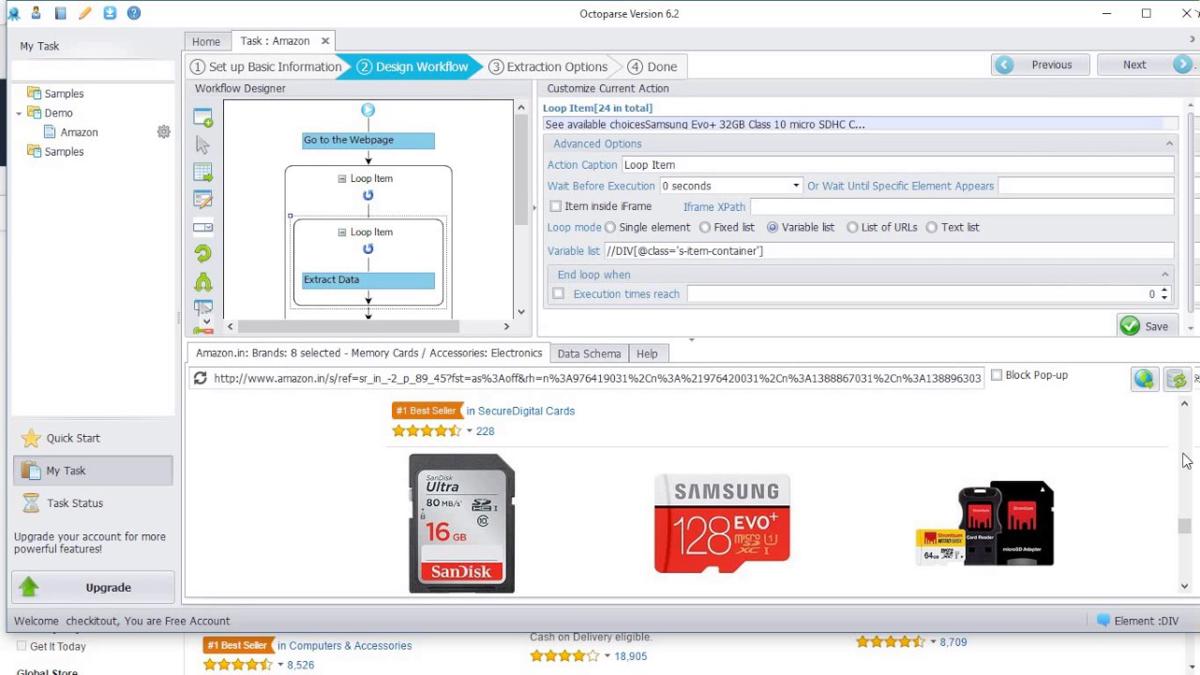

4. Octoparse

Octoparse is a no-code web scraping tool that lets you build scrapers visually. It's aimed at users who want data without writing scripts.

Octoparse key features

- Visual point-and-click interface and auto-detect mode

- Cloud extraction with scheduling

- Proxy / IP rotation and CAPTCHA solving

- Hundreds of preset templates for popular sites

- API access, local + cloud modes

- Data export: JSON, CSV, Excel, etc.

- Support for infinite scroll, AJAX, login flows

Octoparse pros and cons

Pros

- Ideal for non-developers: easy to build scrapers visually

- Templates speed up setup for many sites

- Cloud mode removes need for local infrastructure

- Handles common anti-bot hurdles

Cons

- Struggles on very complex or highly protected sites

- Pricing jumps up with usage and features

- Less flexibility compared to code-based frameworks

Octoparse pricing

Octoparse has a free tier for small jobs. Paid plans now start around $83/month for the Standard plan, with higher tiers climbing toward $249–$299/month. Add-ons like residential proxies, CAPTCHA solving, or pay-per-use templates are billed separately.

Octoparse best use case

Octoparse works well when you need to extract data but don't want to code. Think marketers, analysts, small businesses, or anyone who wants to get results fast. For scrapes that require deep anti-bot evasion or enterprise scale, you might lean toward API-centric tools.

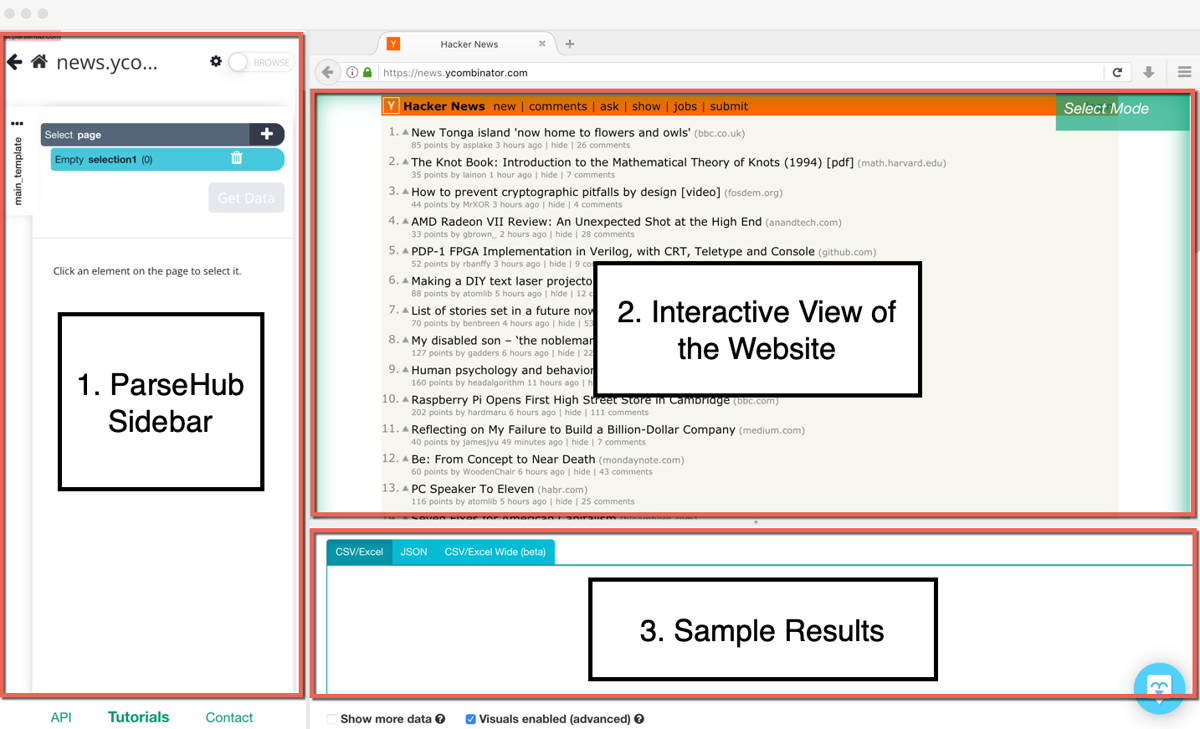

5. ParseHub

ParseHub is a hybrid desktop and cloud scraper with a visual interface. You click on elements to capture data, and it handles pagination and dynamic content for you.

ParseHub key features

- Point-and-click UI (no coding needed)

- Works with JavaScript, AJAX, infinite scroll

- Local and cloud runs with scheduling

- Data export as CSV, Excel, JSON, or via API

- Proxy rotation available on paid plans

ParseHub pros and cons

Pros

- Beginner-friendly, no coding required

- Can handle dynamic sites and JavaScript

- Cross-platform (Windows, macOS, Linux)

- Multiple export options

Cons

- Free plan is very limited

- Advanced features require paid plans

- Slower on large or complex scraping jobs

- Development and feature updates appear slow

ParseHub pricing

ParseHub has a limited free tier. Paid plans start at $189/month, with higher tiers for bigger projects and enterprise use.

ParseHub best use case

ParseHub is suitable for small to medium scraping tasks where you want a simple, visual setup without coding. It's good for non-developers or small teams needing to get data quickly without building infrastructure.

6. Scrapy

Scrapy is a powerful open-source web crawling framework written in Python. It works asynchronously, making it well-suited for scraping large volumes efficiently.

Scrapy key features

- Asynchronous and concurrent requests for speed

- Built-in support for CSS selectors, XPath, and parsing logic

- Modular architecture: spiders, middlewares, pipelines

- Extensible with plugins and middleware

- Can integrate with headless browsers (Scrapy-Playwright, Scrapy-Splash) to handle JavaScript

- Strong community support and detailed documentation
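
To show why asynchronous, concurrent requests speed up large crawls, here is a minimal sketch using Python's standard `asyncio` module. This is not Scrapy code: Scrapy is built on Twisted and schedules requests for you. The `fake_fetch` function is a hypothetical stand-in that simulates network latency instead of making real requests.

```python
import asyncio
import time


async def fake_fetch(url: str, delay: float = 0.1) -> str:
    """Pretend to download a page; the sleep stands in for network latency."""
    await asyncio.sleep(delay)
    return f"<html>{url}</html>"


async def crawl(urls, max_concurrency: int = 5):
    """Fetch all URLs with at most `max_concurrency` requests in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded_fetch(url: str) -> str:
        async with semaphore:
            return await fake_fetch(url)

    # gather() returns results in the same order as the input URLs.
    return await asyncio.gather(*(bounded_fetch(u) for u in urls))


if __name__ == "__main__":
    urls = [f"https://example.com/page/{i}" for i in range(10)]
    start = time.perf_counter()
    pages = asyncio.run(crawl(urls))
    elapsed = time.perf_counter() - start
    print(f"fetched {len(pages)} pages in {elapsed:.2f}s")
```

With ten simulated pages and five slots, the waits overlap, so the run takes roughly two delays rather than ten. Scrapy exposes a similar cap through its concurrency settings.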

Scrapy pros and cons

Pros

- Highly flexible and customizable

- Scales well for large projects

- Actively maintained with a big community

- Clean, modular architecture

Cons

- Requires Python programming skills

- Needs extra setup for JavaScript-heavy sites

- More complex than plug-and-play APIs

Scrapy pricing

Scrapy is free and open-source. There are no licensing costs.

Scrapy best use case

Scrapy is best for developers who need full control and scalability. It's ideal for large crawls, custom workflows, and advanced scraping projects where coding is not an obstacle.

7. Diffbot

Diffbot offers a suite of AI-powered web APIs that convert pages into clean, structured data. It goes beyond scraping by adding NLP, entity recognition, and relationship linking between entities.

Diffbot key features

- Extract API that uses computer vision + NLP to parse pages automatically into JSON

- Crawl API for spidering sites and supplying links to Extract

- Bulk Extract API for processing many URLs asynchronously

- Knowledge Graph / Enhance API to enrich your existing data

- Support for articles, products, discussions, images, videos

- Built-in sentiment, entity classification, relationship linking

Diffbot pros and cons

Pros

- High level of automation with minimal rule setup

- Outputs rich structured data with relationships and entities

- Good for content intelligence and NLP tasks

Cons

- Significantly more expensive than basic scraping APIs

- Crawling and advanced features require higher-tier plans

- Not every site is parsed perfectly — edge cases may need fallback tools

Diffbot pricing

Diffbot includes a free tier with around 10,000 credits/month. Paid plans start at $299/month (Startup, 250k credits) and scale up to $899/month and higher for advanced features and enterprise usage.

Diffbot best use case

Use Diffbot when you want more than raw data, for example when your project involves content analysis, entity extraction, or building a knowledge graph. It's best for analysts, research teams, and developers working on AI/ML applications that need structured intelligence.

Quick comparison of the best web scraping tools in 2025

| Tool | Type / Mode | Key strengths | Limitations | Pricing (starting) | Best for |
|---|---|---|---|---|---|
| ScrapingBee | API-based SaaS | Reliable, handles JS, proxies & CAPTCHAs, scalable | Some requests consume many credits | $49/month | Developers, teams needing a stable API |
| Decodo | API + proxy infra | Large IP pool, anti-bot bypass, flexible options | Pricing scales quickly, tier complexity | ~$0.32/1k requests (lower tiers) | Marketing, analytics, teams avoiding infra hassle |
| ScraperAPI | API-based SaaS | Easy integration, abstracts proxies/CAPTCHAs, JSON output | Less granular control, credit spikes on hard pages | $49/month | Quick plug-and-play scraping for devs |
| Octoparse | No-code SaaS + desktop | Visual builder, templates, cloud mode, JS support | Costly at scale, struggles on complex anti-bot sites | Free / $83+ per month | Non-coders, small to medium projects |
| ParseHub | No-code desktop + cloud | Works with JS sites, simple UI, multiple export formats | Slower for big jobs, limited free plan | Free / $189+ per month | Smaller projects, non-devs |
| Scrapy | Open-source Python framework | Full control, modular, async, extensible | Requires coding, manual setup for proxies/JS | Free | Developers, custom large-scale crawls |
| Diffbot | AI / structured-data API | Automatic structured data, NLP, Knowledge Graph | Expensive, credit pricing complex | $299+ per month | Analysts, AI/ML, knowledge graph building |

Conclusion

Choosing the right tool depends on your project. No-code platforms like Octoparse or ParseHub are great for beginners. Frameworks like Scrapy give full control to developers. Premium options like Diffbot add AI and analysis features.

But if you want a balanced solution in 2025 that is reliable, scalable, and fairly priced, ScrapingBee is the clear winner. It takes care of proxies, CAPTCHAs, and rendering so you can focus on the data.

👉 Start your free trial today and see why ScrapingBee is the top choice for web scraping in 2025.

Frequently asked questions

What are the key features to look for in a web scraping tool?

Look for proxy rotation, JavaScript rendering, CAPTCHA handling, scalability, and flexible data export formats (JSON, CSV, Excel). Good documentation and support also matter.

How do API-based scraping tools differ from visual scraping tools?

API-based tools (like ScrapingBee) let developers send requests programmatically and get structured results. Visual tools (like Octoparse or ParseHub) use point-and-click interfaces and are better for non-coders.

Are there any free web scraping tools available?

Yes. Scrapy is free and open-source. Octoparse and ParseHub also have limited free tiers. But free plans usually have strict limits on speed, volume, or features.

How do web scraping tools handle anti-bot protection on websites?

They use tactics like rotating proxies, mimicking real browsers, rendering JavaScript, and solving CAPTCHAs automatically. Advanced tools combine these to avoid blocks.
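
Two of these tactics, proxy rotation and varying browser headers, can be sketched with the standard library alone. This is a simplified illustration, not how any particular tool implements it. The proxy addresses and User-Agent strings below are hypothetical placeholders, and real services layer on far more (browser fingerprinting, retries, CAPTCHA solving).

```python
import itertools
import urllib.request

# Hypothetical proxy endpoints and User-Agent strings for illustration only.
PROXIES = [
    "http://proxy-a.example:8080",
    "http://proxy-b.example:8080",
    "http://proxy-c.example:8080",
]
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) ExampleBrowser/1.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 14_0) ExampleBrowser/2.0",
]


def rotating_request_plans(urls):
    """Pair each URL with the next proxy and User-Agent in round-robin order."""
    proxy_cycle = itertools.cycle(PROXIES)
    agent_cycle = itertools.cycle(USER_AGENTS)
    for url in urls:
        yield {
            "url": url,
            "proxy": next(proxy_cycle),
            "user_agent": next(agent_cycle),
        }


def opener_for(plan):
    """Build a urllib opener that routes through the plan's proxy and header."""
    proxy_handler = urllib.request.ProxyHandler(
        {"http": plan["proxy"], "https": plan["proxy"]}
    )
    opener = urllib.request.build_opener(proxy_handler)
    opener.addheaders = [("User-Agent", plan["user_agent"])]
    return opener


if __name__ == "__main__":
    pages = [f"https://example.com/page/{i}" for i in range(4)]
    for plan in rotating_request_plans(pages):
        print(plan["url"], "via", plan["proxy"])
```

Calling `opener_for(plan).open(plan["url"])` would send the request through that proxy. It is left out here because the placeholder proxies do not exist.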

What factors should I consider when choosing a web scraping tool?

Consider your coding skills, project scale, budget, and the type of sites you want to scrape. If you need scalability and balanced pricing, API-based services like ScrapingBee are usually the best fit.


Kevin worked in the web scraping industry for 10 years before co-founding ScrapingBee. He is also the author of the Java Web Scraping Handbook.