Are you a marketer tracking competitor prices? A content creator monitoring trending topics? Maybe you're a small business owner researching leads or a data analyst gathering insights from websites? If any of these describe you, you're in the right place!

No-code platforms like n8n are changing how we handle repetitive data collection tasks. What used to require hiring developers or spending hours on manual copying can now be automated with visual workflows in minutes.

The rise of AI-powered web scraping has made this even more accessible. Instead of wrestling with complex code or brittle CSS selectors, you can now describe what data you want in plain English and let AI handle the extraction.

That's exactly why this tutorial exists. Today, I'm walking you through building an intelligent link-crawling bot using n8n and our AI Web Scraping API.

Why You Need This N8N Web Scraping Strategy

After building custom Python scrapers for years and dealing with constant maintenance headaches, I was desperately searching for something more sustainable.

n8n stood out because it actually handles complex workflows without falling apart, and when you pair it with our AI Web Scraping API, it becomes incredibly powerful.

Now, we'll build a workflow that crawls through multiple website pages, extracts H1 tags using AI, and outputs clean JSON data. No servers to maintain, no code to debug, and no more manual copying.

Think of it as your personal web scraping apprenticeship, minus the years of trial and error.

By the end of this tutorial, you'll have a fully functional n8n workflow that crawls website pages, follows internal links, and extracts H1 tags from each page. Let's dive in!

Getting Started With N8N in 2 Quick Steps

Before we start building our web scraping workflow, you'll need accounts on both platforms. Don't worry - both offer generous free tiers that'll cover everything in this tutorial.

Step 1: Creating Your N8N Account (Free Tier Gets You Started)

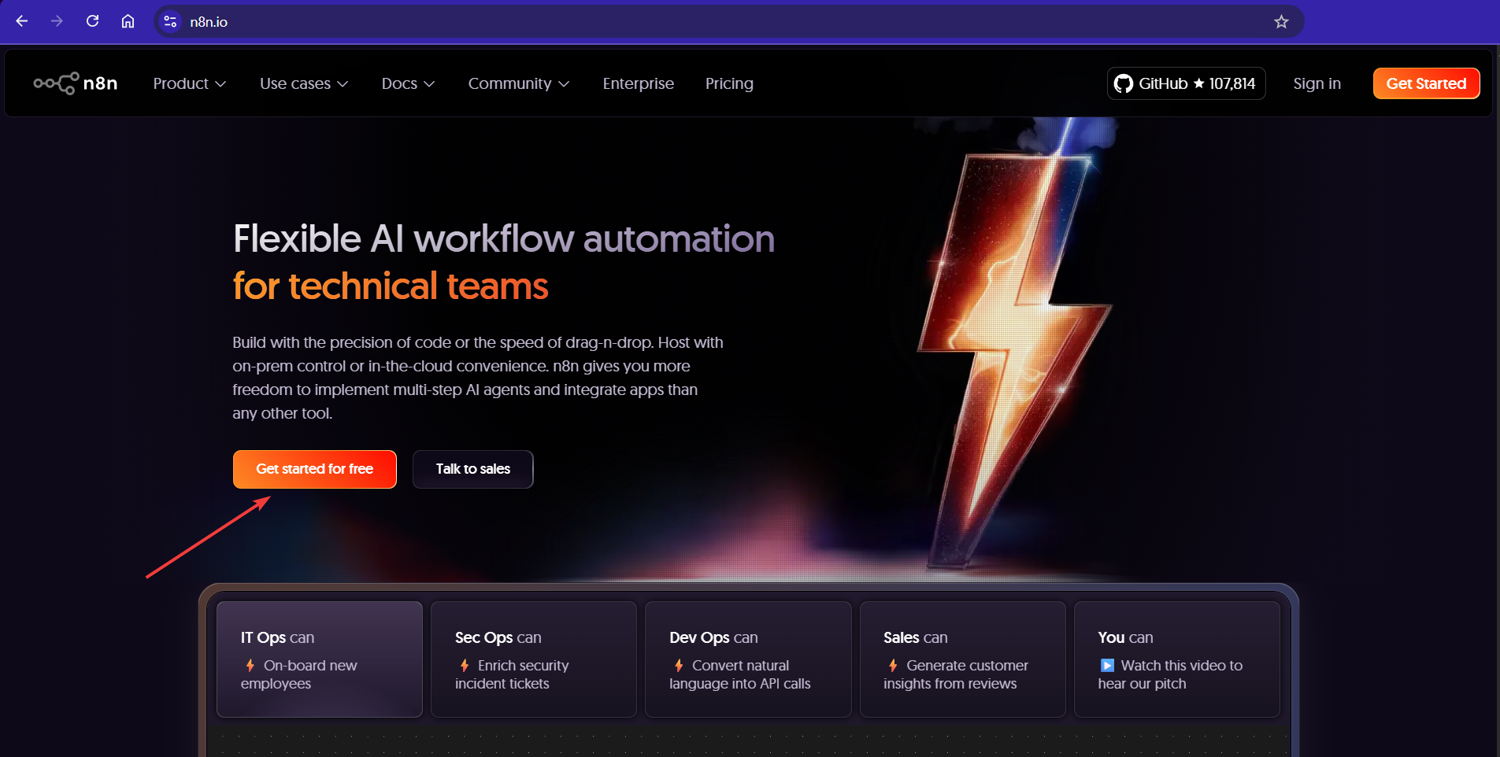

Head over to n8n.io and click the "Get started for free" button:

Once you're in, n8n will show you their workflow canvas. This is where the magic happens - think of it as your visual programming interface where you'll drag and drop nodes instead of writing code.

Step 2: Getting Your ScrapingBee API Key (1000 Free API Calls)

To help you access hard-to-scrape websites, we'll use ScrapingBee's web scraping API, which is built to bypass anti-bot systems like Cloudflare and Datadome.

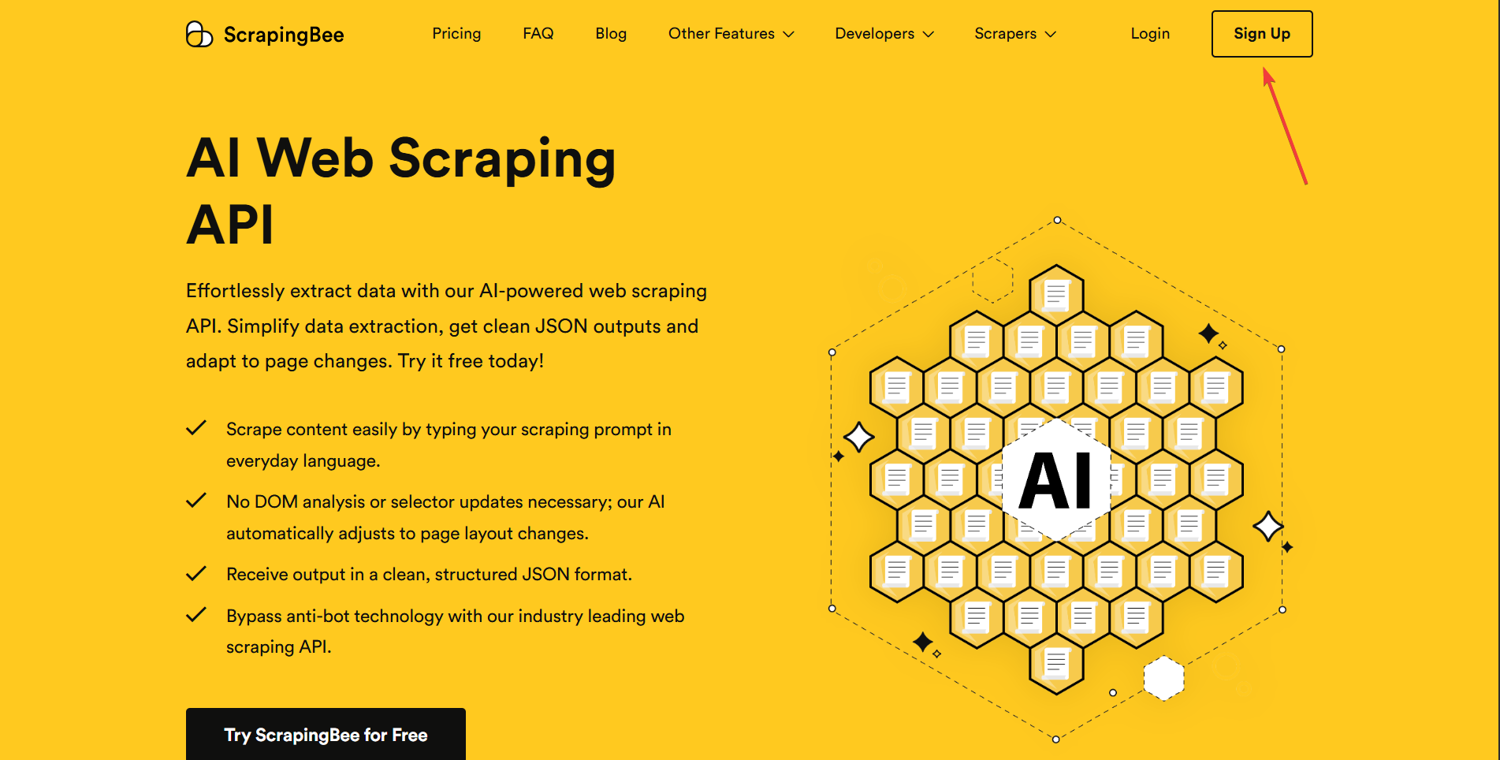

See that "Sign up" button at the top right of this page? Click it! We offer 1,000 free API calls - more than enough to test your workflow and scrape hundreds of pages.

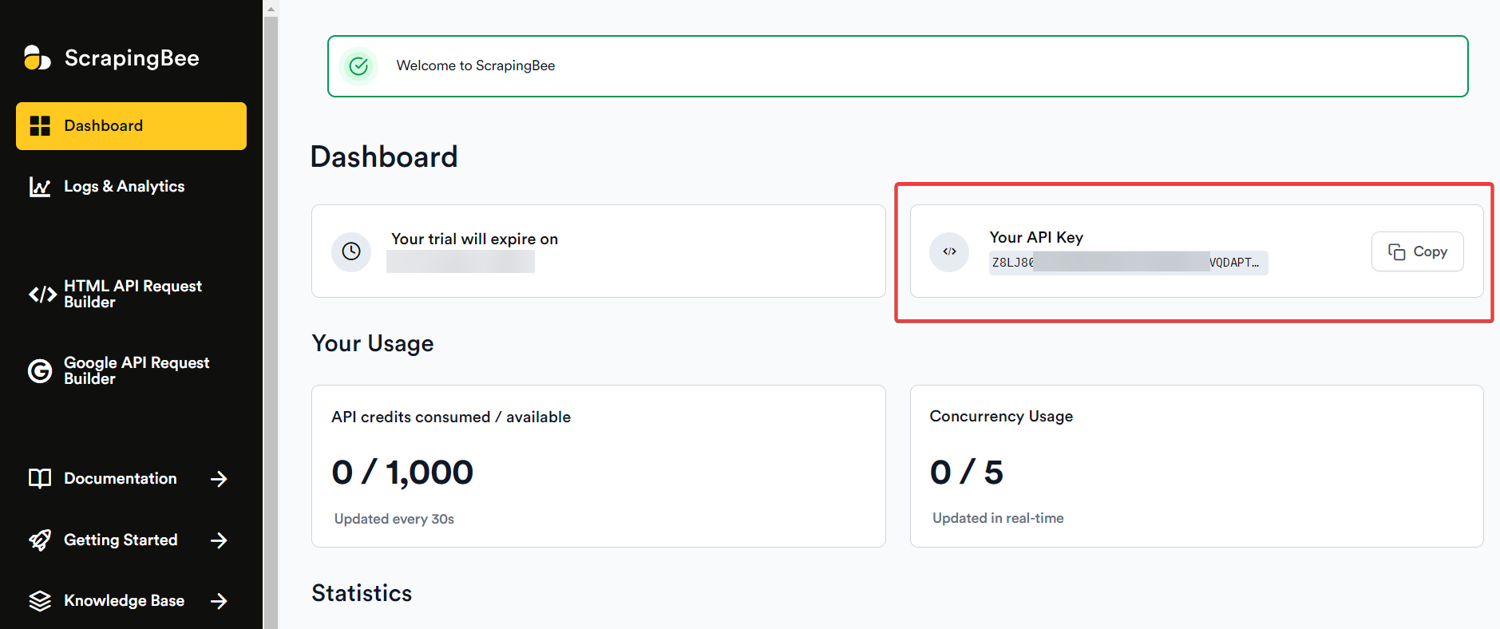

After signing up, navigate to your dashboard and copy your API key (you'll need this in subsequent steps):

And there you go! Keep this API key handy. You can copy it from the dashboard again at any time.

Pro Tip: Never share your API key publicly or commit it to version control platforms like GitHub.
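If you ever call the API from your own script outside n8n, one common way to follow this tip is to read the key from an environment variable instead of hard-coding it. Here's a minimal sketch, assuming a variable named SCRAPINGBEE_API_KEY (that name and the readApiKey helper are our own choices for illustration, not something either platform requires):

```javascript
// Read the API key from an environment-like object instead of hard-coding it.
// SCRAPINGBEE_API_KEY is a hypothetical variable name chosen for this sketch.
function readApiKey(env = process.env) {
  const key = env.SCRAPINGBEE_API_KEY;
  if (typeof key !== "string" || key.trim() === "") {
    throw new Error("SCRAPINGBEE_API_KEY is not set");
  }
  return key.trim();
}

// Demo with a fake environment so the sketch runs without a real key.
const demoKey = readApiKey({ SCRAPINGBEE_API_KEY: "demo-key-123" });
console.log(`Loaded a key of length ${demoKey.length}`);
```

Because the key lives outside the file, the script itself can be committed to version control safely.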

With both accounts ready and your ScrapingBee API key secured, it's time to roll up our sleeves and build something awesome. Let's head back to n8n and create our web scraping powerhouse!

Building Your N8N Web Scraping Workflow in 7 Easy Steps

Now comes the fun part - building the actual workflow in n8n.

In these steps, we'll create a simple but powerful 4-node setup that handles everything from triggering the scrape to processing the results. Let's start!

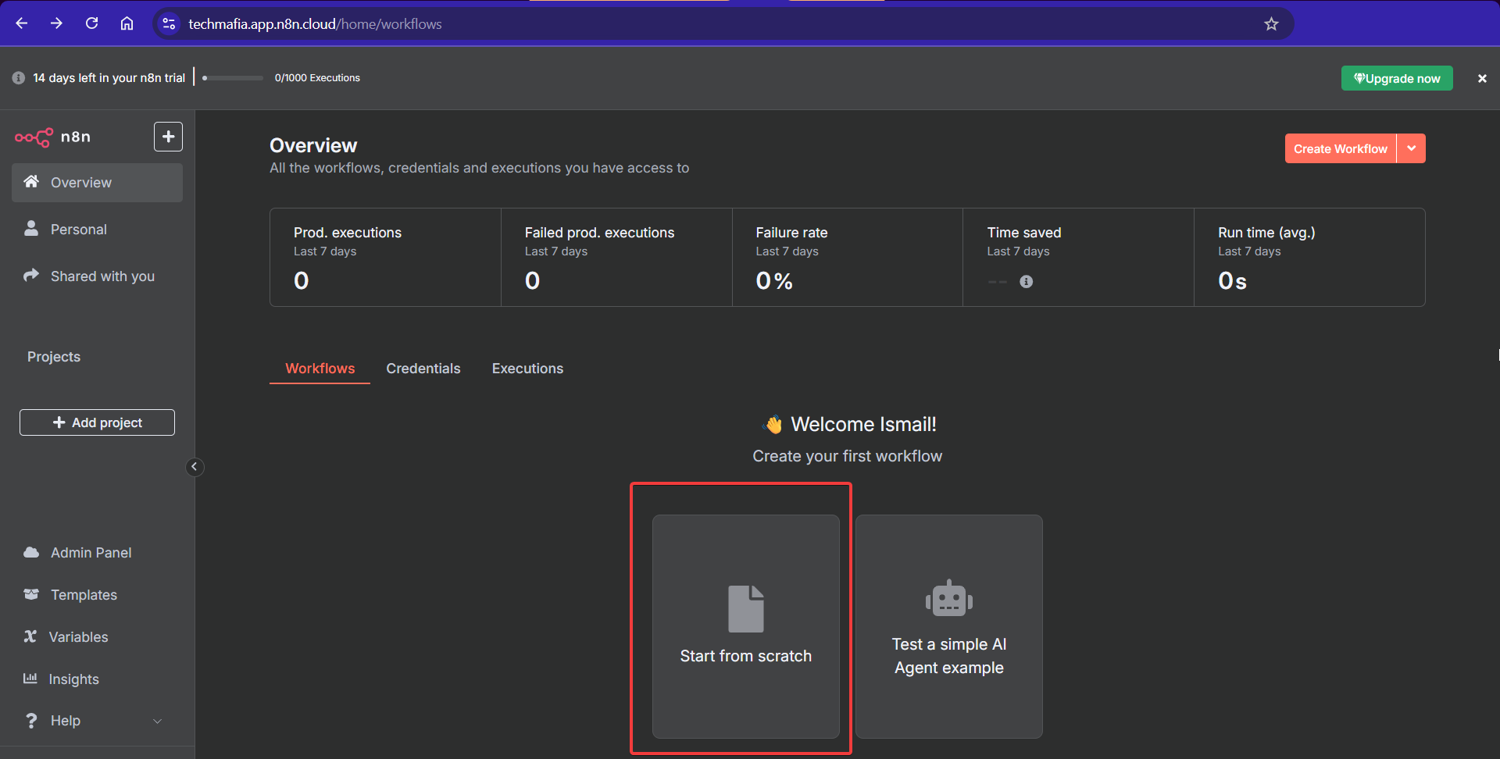

Step 1: Creating Your New Workflow

From your n8n dashboard, click on "Start from scratch" to create a fresh workflow canvas:

This gives you a clean slate for the four nodes we'll add in the next steps.

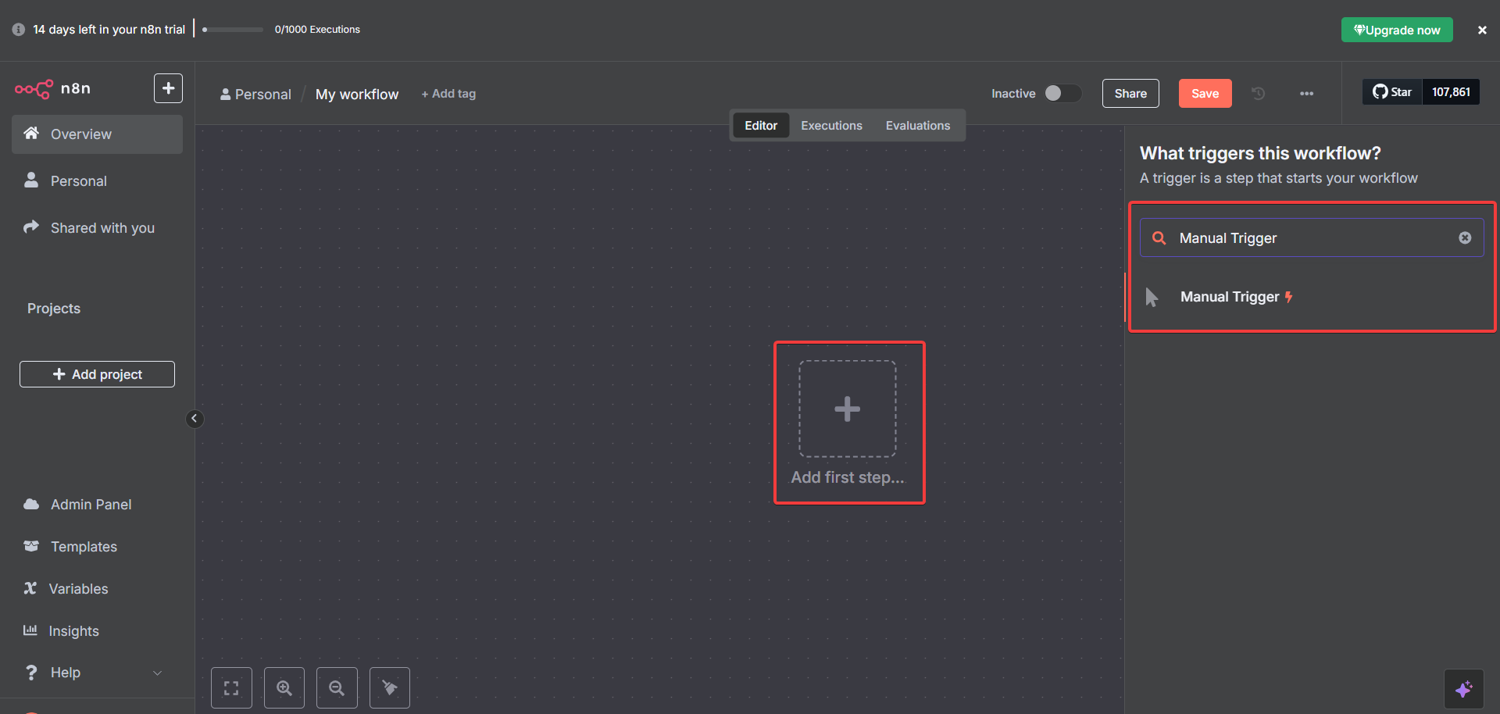

Step 2: Setting Up the Manual Trigger Node

In your n8n canvas, you'll see a "+" button. Click it and search for "Manual Trigger" - this node lets you start your workflow with a simple button click:

The Manual Trigger is perfect for testing because you control exactly when your scraping starts. No schedules, no complications - just click and scrape.

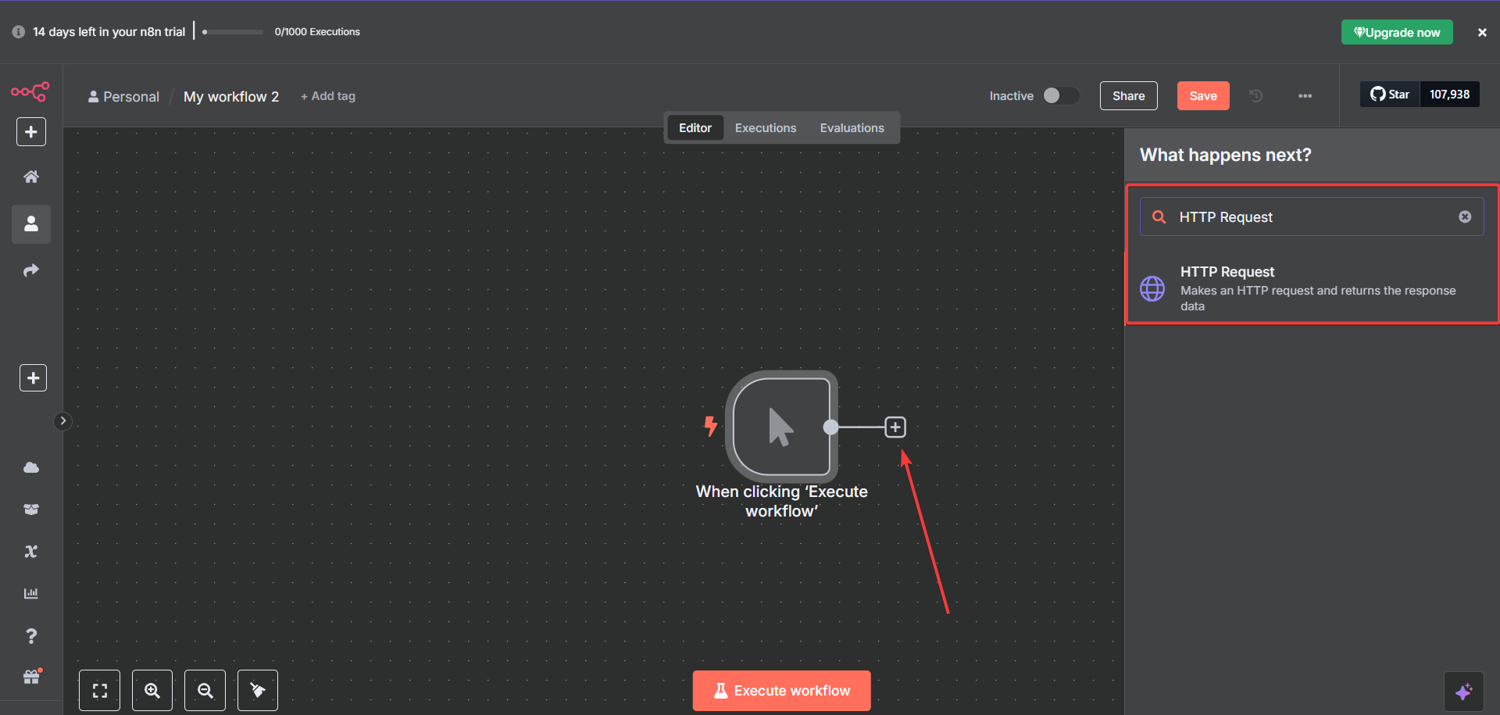

Step 3: Adding Your First HTTP Request Node

Next, add an "HTTP Request" node and connect it to your Manual Trigger. This is where we'll call our web scraping API:

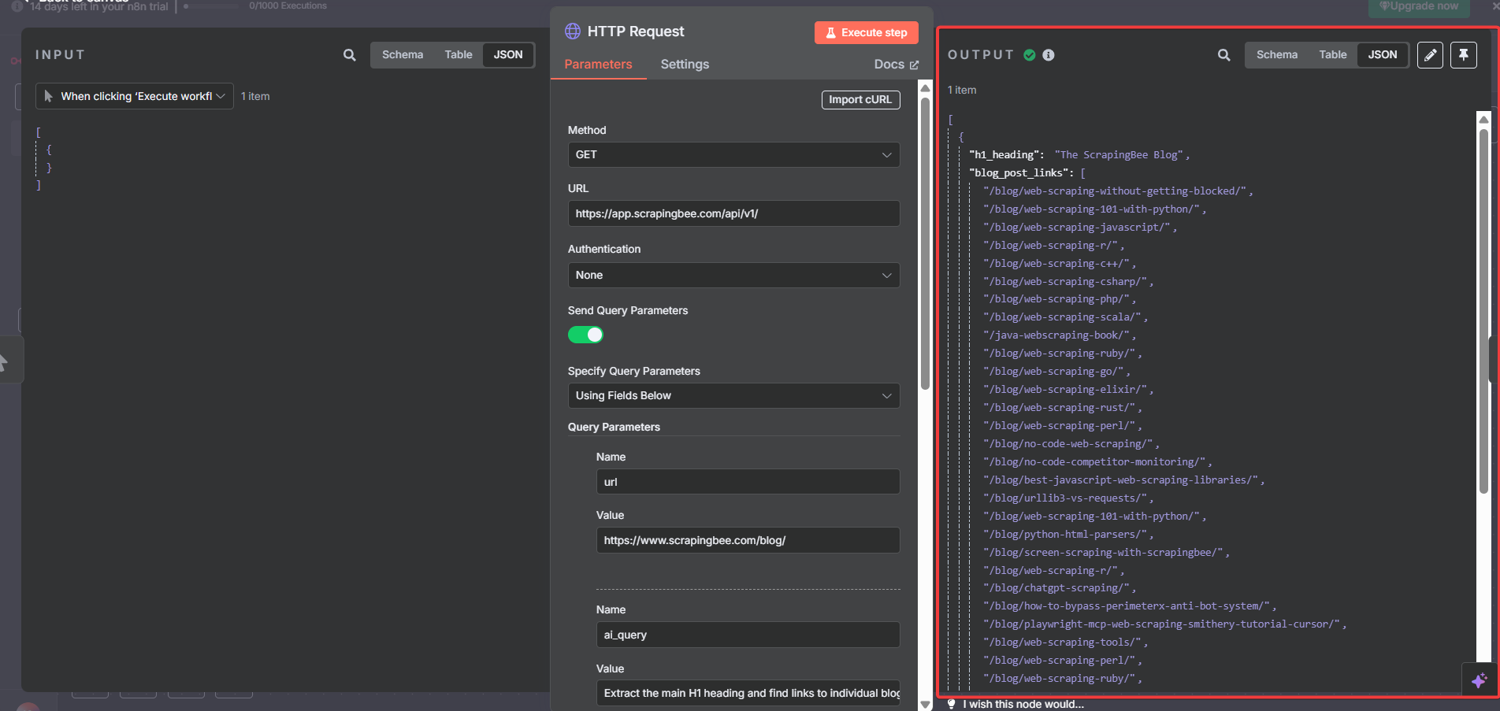

Configuring the HTTP Request Node for ScrapingBee API Integration

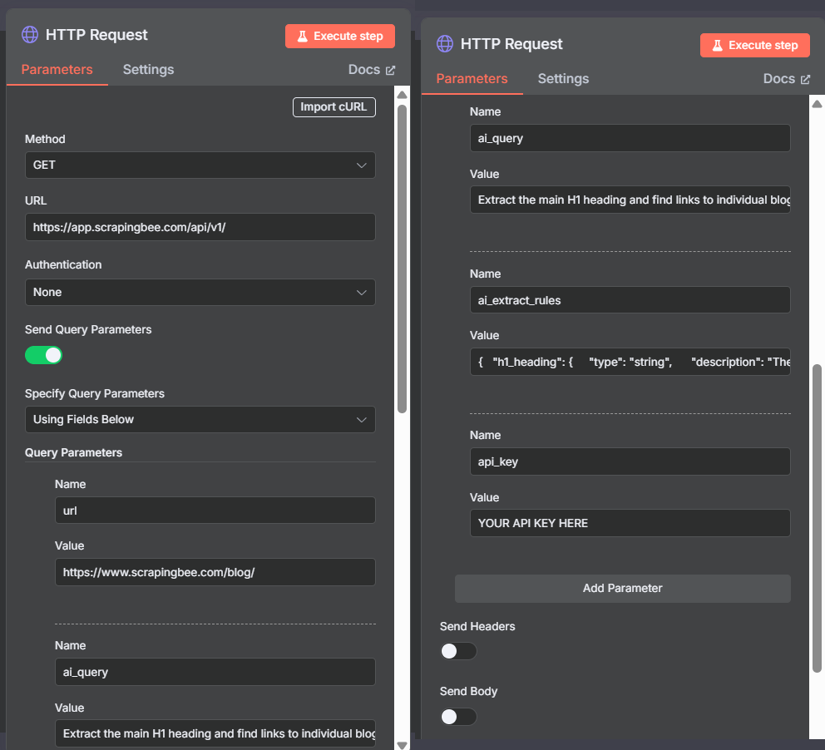

Click on your HTTP Request node to open its configuration.

Now, we need to add the configurations for our web scraping API integration. Here's exactly what to set:

Basic Settings:

- Method: GET

- URL: https://app.scrapingbee.com/api/v1/

- Authentication: None

- Send Query Parameters: ON

- Specify Query Parameters: Using Fields Below

Then, add these exact four parameters (click "Add Parameter" for each, in no particular order):

- Parameter 1:

  - Name: api_key

  - Value: [YOUR_SCRAPINGBEE_API_KEY]

- Parameter 2:

  - Name: url

  - Value: https://www.scrapingbee.com/blog/

[Note: This is just our example URL - replace it with any website you want to scrape!]

Why scrape our blog for this tutorial? We're using our own blog because we know every page has clear H1 headings to extract. Nothing's more frustrating than following a tutorial only to get empty results because the example site doesn't have the data structure you're looking for. With our blog, you're guaranteed to see real H1s and working results - perfect for learning the concepts before applying them to your target websites.

- Parameter 3:

  - Name: ai_query

  - Value: Extract the main H1 heading and find links to individual blog posts

- Parameter 4:

  - Name: ai_extract_rules

  - Value: {"h1_heading":{"type":"string","description":"The main H1 heading from the blog page"},"blog_post_links":{"type":"list","description":"URLs that link to individual blog posts on this site"}}

Important: Turn OFF "Send Headers" and "Send Body".

Here's where our workflow gets intelligent. Instead of wrestling with CSS selectors that break every time a website updates, we'll use natural language to tell our scraper exactly what we want.
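To make the configuration less abstract, here's a rough sketch of the GET request those four query parameters add up to. The buildScrapingBeeUrl helper is hypothetical (n8n assembles the request for you), and the sketch only builds the URL without sending anything:

```javascript
// Sketch: assemble the same endpoint and query parameters the HTTP Request node uses.
// buildScrapingBeeUrl is a made-up helper for illustration; nothing is sent over the network.
function buildScrapingBeeUrl({ apiKey, targetUrl, aiQuery, aiExtractRules }) {
  const endpoint = new URL("https://app.scrapingbee.com/api/v1/");
  endpoint.searchParams.set("api_key", apiKey);
  endpoint.searchParams.set("url", targetUrl);
  endpoint.searchParams.set("ai_query", aiQuery);
  // The extraction rules travel as a JSON string, exactly as pasted into n8n.
  endpoint.searchParams.set("ai_extract_rules", JSON.stringify(aiExtractRules));
  return endpoint.toString();
}

const requestUrl = buildScrapingBeeUrl({
  apiKey: "YOUR_SCRAPINGBEE_API_KEY",
  targetUrl: "https://www.scrapingbee.com/blog/",
  aiQuery: "Extract the main H1 heading and find links to individual blog posts",
  aiExtractRules: {
    h1_heading: { type: "string", description: "The main H1 heading from the blog page" },
    blog_post_links: { type: "list", description: "URLs that link to individual blog posts on this site" },
  },
});

console.log(requestUrl);
```

Note how the target URL and the JSON rules get URL-encoded along the way. n8n does the same encoding when "Send Query Parameters" is on, which is why you can paste the raw values into the fields.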

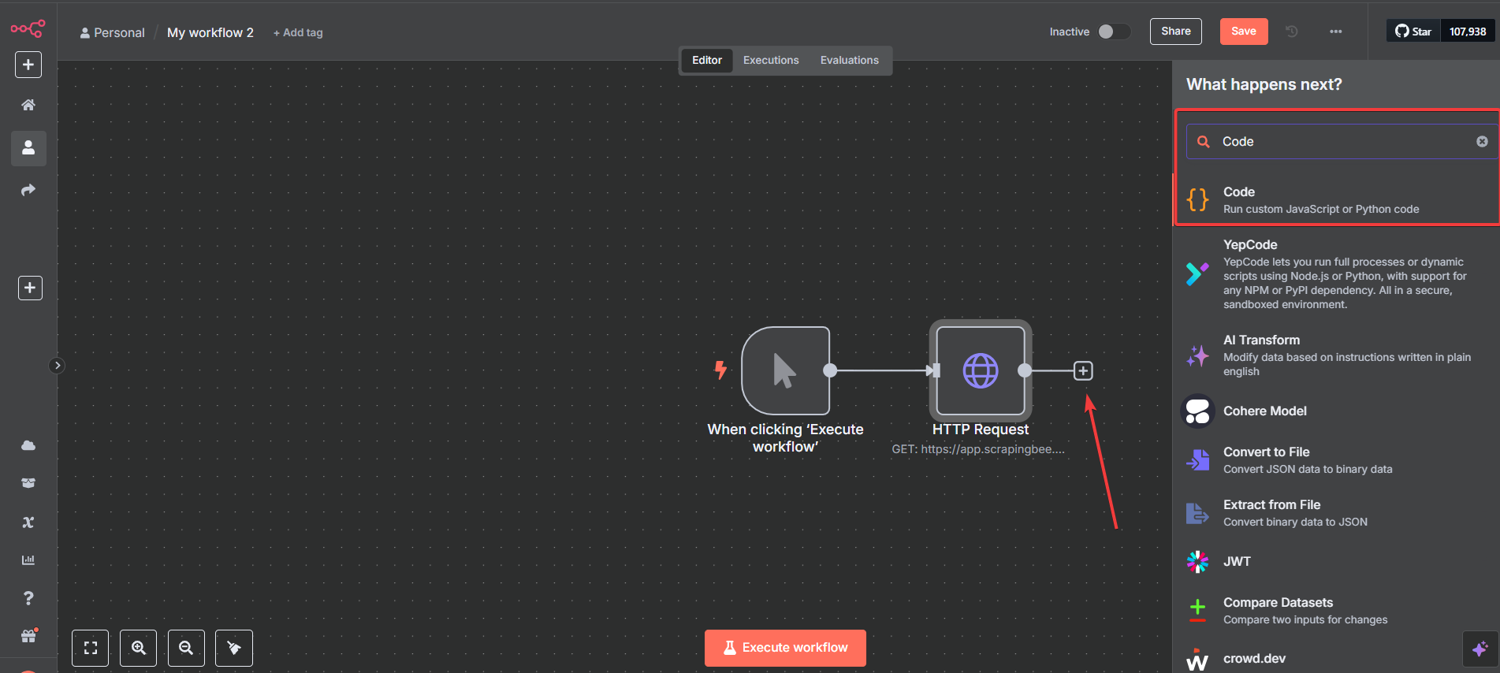

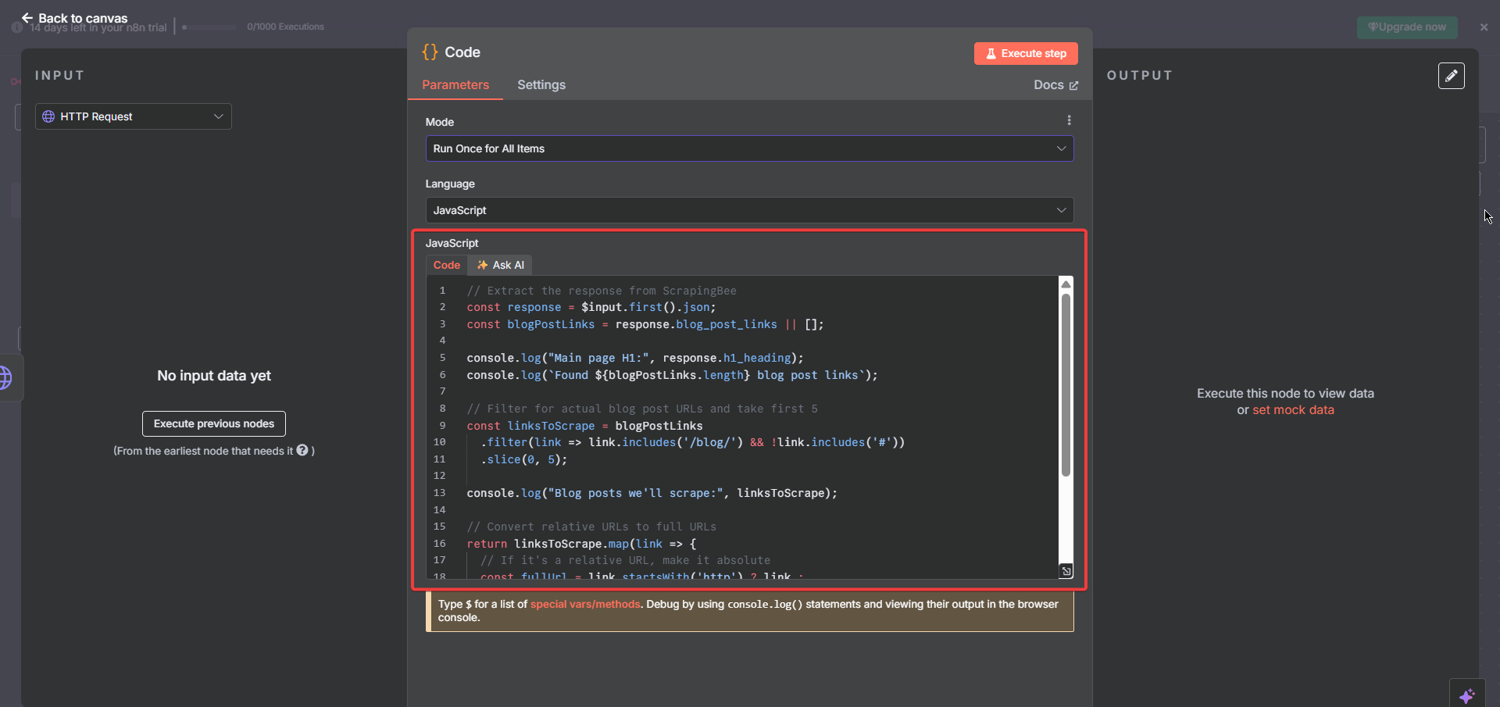

Step 4: Adding the Code Node for Link Extraction Logic

Next, in the canvas, add a "Code" node after your HTTP Request node. This processes the scraped data and prepares it for the next step (don't worry - I'll give you the exact code to copy-paste):

Click on your Code node and paste this exact JavaScript:

```javascript
// Extract the response from ScrapingBee
const response = $input.first().json;
const blogPostLinks = response.blog_post_links || [];

console.log("Main page H1:", response.h1_heading);
console.log(`Found ${blogPostLinks.length} blog post links`);

// Filter for actual blog post URLs and take first 5
// You can replace 5 with your preferred number
const linksToScrape = blogPostLinks
  .filter(link => link.includes('/blog/') && !link.includes('#'))
  .slice(0, 5);

console.log("Blog posts we'll scrape:", linksToScrape);

// Convert relative URLs to full URLs
return linksToScrape.map(link => {
  // If it's a relative URL, make it absolute
  const fullUrl = link.startsWith('http') ? link : `https://www.scrapingbee.com${link}`;

  return {
    url: fullUrl,
    type: 'blog_post'
  };
});
```

This code does three critical things:

- Extracts the blog post links from ScrapingBee's response

- Filters them to keep only links that contain /blog/ and have no # anchor, then takes the first 5

- Converts relative URLs to full URLs
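If you'd like to see that logic on its own before running it in n8n, here's a small standalone sketch of the same filter, limit, and URL conversion. The selectBlogPostLinks name and the sample links are made up for illustration; inside n8n the input comes from $input instead:

```javascript
// Standalone version of the Code node logic, runnable in plain Node.js.
// selectBlogPostLinks and the sample data below are illustrative, not part of n8n.
function selectBlogPostLinks(blogPostLinks, limit = 5, origin = "https://www.scrapingbee.com") {
  return blogPostLinks
    // Keep links that contain /blog/ and drop anything with a # anchor.
    .filter(link => link.includes("/blog/") && !link.includes("#"))
    .slice(0, limit)
    // Relative links get the site origin prepended; absolute links pass through.
    .map(link => ({
      url: link.startsWith("http") ? link : `${origin}${link}`,
      type: "blog_post",
    }));
}

const sampleLinks = [
  "/blog/web-scraping-101-with-python/",
  "/blog/web-scraping-javascript/#setup",
  "/pricing/",
  "https://www.scrapingbee.com/blog/web-scraping-without-getting-blocked/",
];

const selected = selectBlogPostLinks(sampleLinks);
console.log(selected);
```

With this sample, the anchor link and the pricing page are dropped, so two items remain. One thing to keep in mind: the filter is a simple substring check, so any link containing /blog/ passes, including links on other domains. Tighten it if your target site needs stricter rules.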

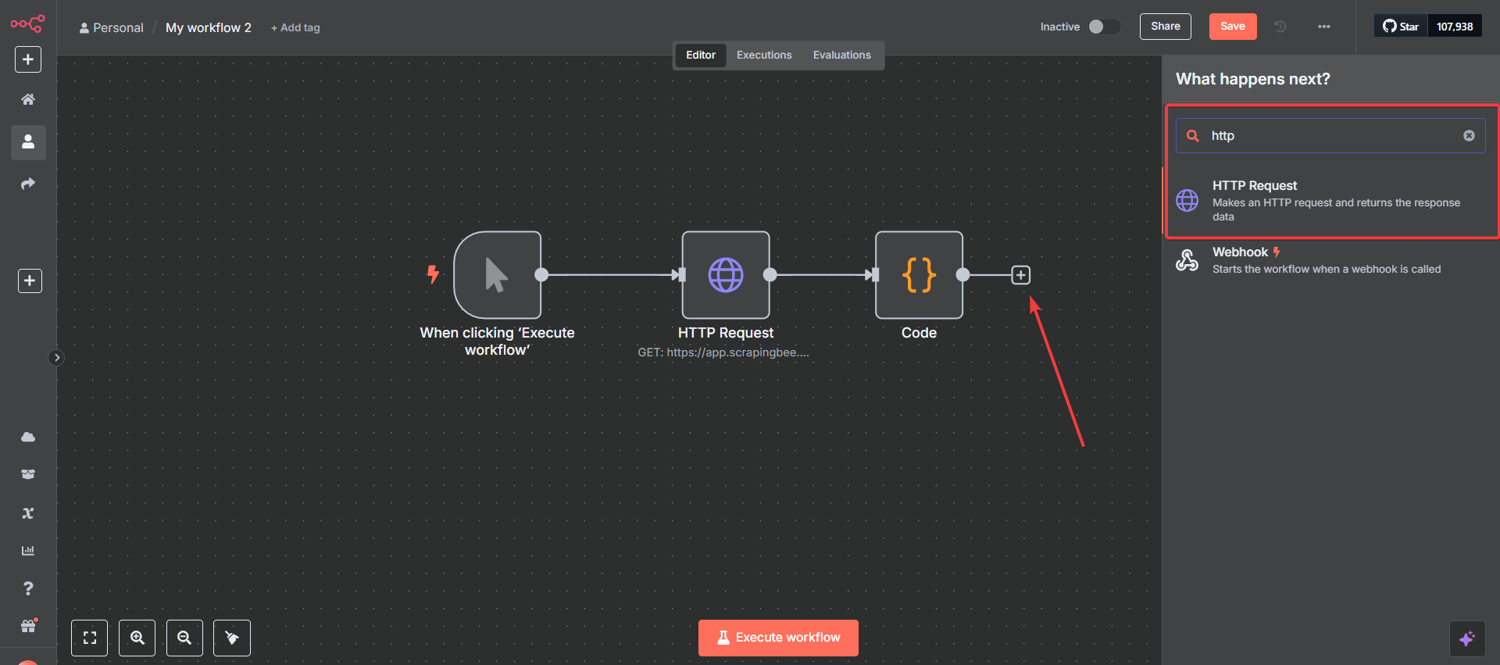

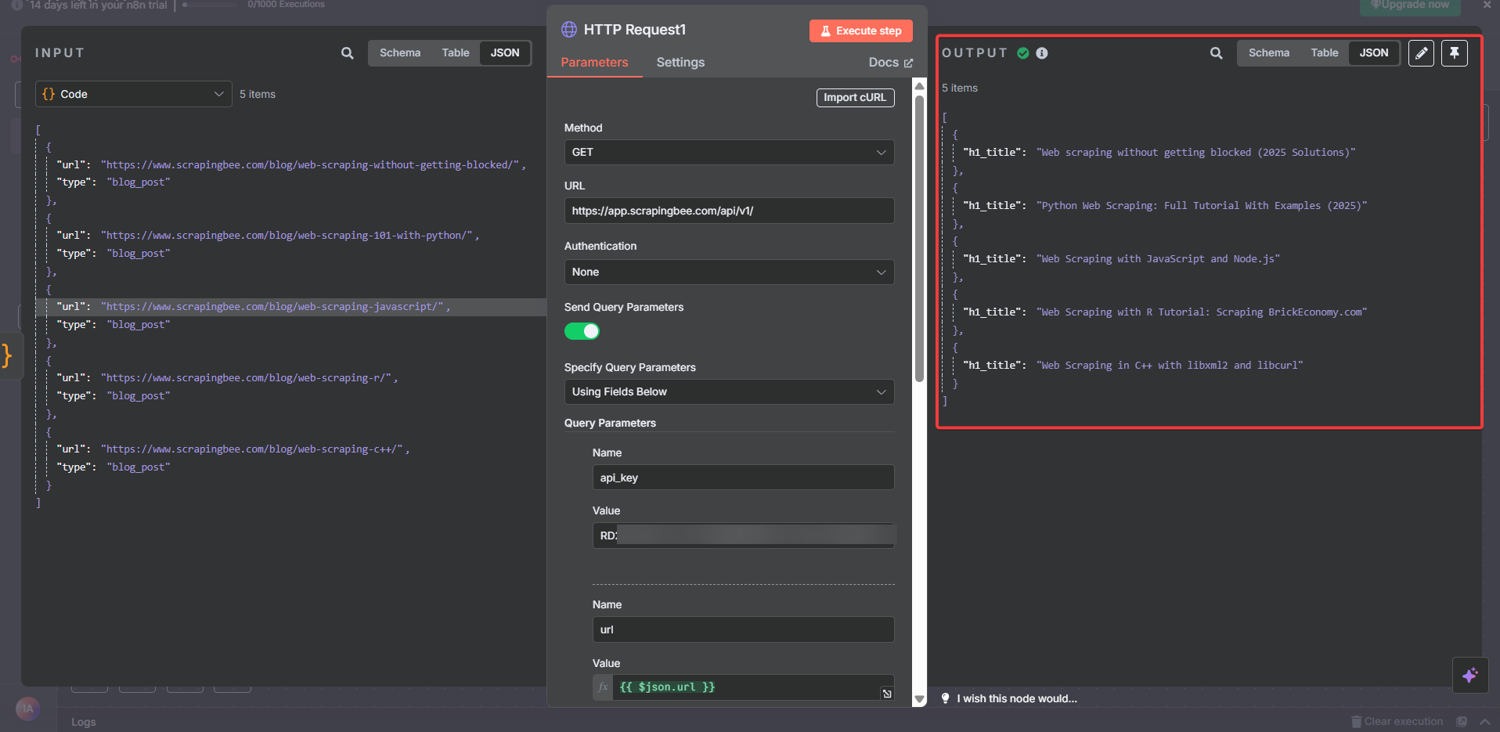

Step 5: Adding the Second HTTP Request Node (The Spider)

Now, we add another HTTP Request node after the Code node. This is what actually "spiders" the individual pages:

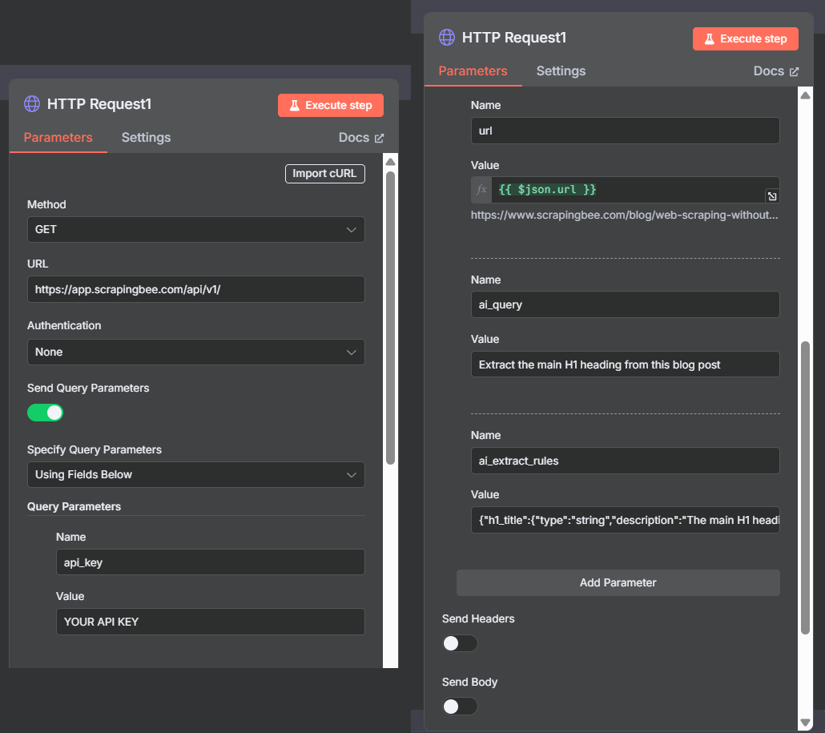

Configuring the Spider Node

This node is similar to the first, but with a key difference - it uses expressions to dynamically scrape each URL we've retrieved from the Code node output.

Basic Settings:

- Method: GET

- URL: https://app.scrapingbee.com/api/v1/

- Send Query Parameters: ON

Then, Query Parameters:

- Parameter 1:

  - Name: api_key

  - Value: [YOUR_SCRAPINGBEE_API_KEY]

- Parameter 2:

  - Name: url

  - Value: {{ $json.url }}

- Parameter 3:

  - Name: ai_query

  - Value: Extract the main H1 heading from this blog post

- Parameter 4:

  - Name: ai_extract_rules

  - Value: {"h1_title":{"type":"string","description":"The main H1 heading of this blog post"}}

The {{ $json.url }} expression tells n8n to use the URL from each item that the Code node outputs. This is what makes the spidering work!
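Conceptually, n8n runs this node once per incoming item and fills in the expression each time. Here's a rough stand-in for that behavior in plain JavaScript. The buildSpiderRequests helper and the sample items are hypothetical, and the sketch only builds the request URLs without calling the API:

```javascript
// Stand-in for n8n's per-item expression evaluation: one request URL per input item.
// buildSpiderRequests is a made-up helper; n8n does this for you behind the scenes.
function buildSpiderRequests(items, apiKey) {
  const rules = {
    h1_title: { type: "string", description: "The main H1 heading of this blog post" },
  };
  return items.map(item => {
    const endpoint = new URL("https://app.scrapingbee.com/api/v1/");
    endpoint.searchParams.set("api_key", apiKey);
    endpoint.searchParams.set("url", item.json.url); // what {{ $json.url }} resolves to
    endpoint.searchParams.set("ai_query", "Extract the main H1 heading from this blog post");
    endpoint.searchParams.set("ai_extract_rules", JSON.stringify(rules));
    return endpoint.toString();
  });
}

// Sample items shaped like the Code node output (n8n keeps each item's data under "json").
const codeNodeOutput = [
  { json: { url: "https://www.scrapingbee.com/blog/web-scraping-101-with-python/", type: "blog_post" } },
  { json: { url: "https://www.scrapingbee.com/blog/web-scraping-javascript/", type: "blog_post" } },
];

const spiderRequests = buildSpiderRequests(codeNodeOutput, "YOUR_SCRAPINGBEE_API_KEY");
console.log(`${spiderRequests.length} requests prepared`);
```

Two items in, two requests out. That one-to-one mapping is why five links from the Code node turn into five scraped pages.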

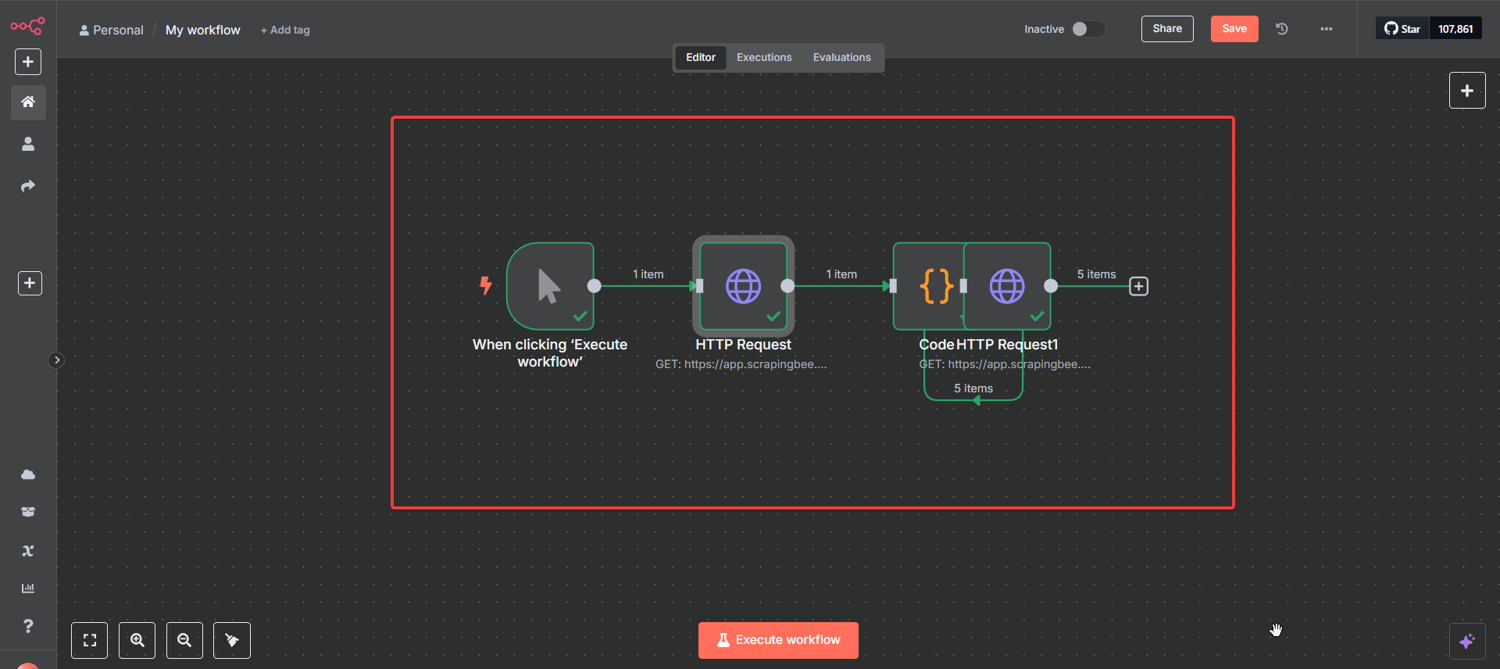

Step 6: Testing Your Web Scraping Spider

Now for the exciting part - let's see your spider in action! Your workflow should look like this:

Manual Trigger → HTTP Request → Code → HTTP Request

Running Your First Test

- Click the "Execute Workflow" button

- Watch the magic happen!

Pro Tip: I always start with small tests like this. It's tempting to scrape 100 pages right away, but testing with 5 pages first saves hours of debugging later.

Step 7: Analyzing Your Spider Results

After execution, click on each node to see what happened:

First HTTP Request Results

Click on your first HTTP Request node. You should see:

```json
{
  "h1_heading": "The ScrapingBee Blog",
  "blog_post_links": [
    "/blog/web-scraping-without-getting-blocked/",
    "/blog/web-scraping-101-with-python/",
    "/blog/web-scraping-javascript/",
    ...
  ]
}
```
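If your own target site returns something different, a quick shape check can tell you what's missing before the rest of the workflow runs. This is an optional sketch, not part of the workflow above. The checkListingResponse helper is hypothetical and simply looks for the two fields we asked the AI to extract:

```javascript
// Optional sanity check for the first response; returns a list of problems (empty means OK).
// checkListingResponse is a made-up helper based on the two fields in our extraction rules.
function checkListingResponse(response) {
  if (response === null || typeof response !== "object") {
    return ["response is not an object"];
  }
  const problems = [];
  if (typeof response.h1_heading !== "string" || response.h1_heading.trim() === "") {
    problems.push("h1_heading is missing or empty");
  }
  if (!Array.isArray(response.blog_post_links)) {
    problems.push("blog_post_links is not a list");
  } else if (response.blog_post_links.length === 0) {
    problems.push("blog_post_links is empty");
  }
  return problems;
}

const goodResponse = {
  h1_heading: "The ScrapingBee Blog",
  blog_post_links: ["/blog/web-scraping-101-with-python/"],
};

console.log(checkListingResponse(goodResponse)); // no problems for this sample
console.log(checkListingResponse({}));           // both fields reported as missing
```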

Second HTTP Request Results (The Spider Success!)

Click on your second HTTP Request node. You should see 5 separate results - one for each blog post:

```json
{
  "h1_title": "Web scraping without getting blocked (2026 Solutions)"
}
{
  "h1_title": "Python Web Scraping: Full Tutorial With Examples (2026)"
}
{
  "h1_title": "Web Scraping with JavaScript and Node.js"
}
...
```
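The spider results contain only titles, so you may want to put each title back next to the URL it came from. Here's one possible sketch, assuming the results come back in the same order as the links went in. That ordering is an assumption worth checking in your own run, and pairTitlesWithUrls is a hypothetical helper, not an n8n feature:

```javascript
// Pair each scraped title with its source URL by position.
// Assumes results arrive in the same order as the input links (verify in your own run).
function pairTitlesWithUrls(linkItems, spiderResults) {
  return linkItems.map((item, index) => ({
    url: item.url,
    // Fall back to null when a page returned no title.
    h1_title: spiderResults[index]?.h1_title ?? null,
  }));
}

const linkItems = [
  { url: "https://www.scrapingbee.com/blog/web-scraping-101-with-python/", type: "blog_post" },
  { url: "https://www.scrapingbee.com/blog/web-scraping-javascript/", type: "blog_post" },
];
const spiderResults = [
  { h1_title: "Python Web Scraping: Full Tutorial With Examples (2026)" },
  {}, // simulate a page where no H1 was extracted
];

const combined = pairTitlesWithUrls(linkItems, spiderResults);
console.log(JSON.stringify(combined, null, 2));
```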

Congratulations! 🎉 You've just built a web-scraping spider that:

- ✅ Scraped the main blog page

- ✅ Extracted the main H1 ("The ScrapingBee Blog")

- ✅ Found internal blog post links

- ✅ Automatically scraped 5 individual blog posts

- ✅ Extracted each post's unique H1 title

You've built something that would typically require:

- Complex web scraping libraries

- Proxy management

- JavaScript rendering setup

- Link extraction logic

- URL processing

- Error handling

Instead, you did it all with:

- ✅ 4 simple nodes in n8n

- ✅ Zero complex code (just basic JavaScript)

- ✅ AI-powered extraction (no CSS selectors to break)

- ✅ Built-in proxy handling (ScrapingBee manages this)

- ✅ Automatic JavaScript rendering (ScrapingBee handles it)

This is the power of combining n8n's visual workflow approach with our AI Web Scraping API - complex tasks become simple, visual workflows that anyone can understand and modify.

We handle the infrastructure, while you focus on what matters most - your business!

Beyond H1 Tags: Your Next No-Code Web Scraping Adventures

You've just built a smart web scraping workflow that would have taken weeks to code from scratch. But this is only the beginning!

The same n8n + ScrapingBee approach opens up a world of no-code automation possibilities - from monitoring competitor prices to tracking job listings, from updating spreadsheets to sending alerts to your team:

| Article | Description |

|---|---|

| How to scrape websites with Google Sheets | Skip the complex workflows! Learn to scrape data straight into spreadsheets with simple formulas. Excel users welcome, Google Sheets preferred. |

| Scrape Amazon products' price with no code | Because manually checking competitor prices is so 2019. Automate Amazon product monitoring and never miss a price drop again. |

| Extract Job Listings from Indeed with Make.com | Turn job hunting into job hunting automation. Extract listings, salaries, and details without the soul-crushing manual browsing. |

| No-code web scraping | The comprehensive guide to building powerful scrapers without touching code. Like this tutorial, but for everything else on the internet. |

| Send stock prices to Slack with Make | Get market updates delivered to your team chat. Because refreshing Yahoo Finance 50 times a day isn't a sustainable strategy. |

| No-code competitor monitoring | Keep tabs on your competition automatically. Know when they change prices, launch features, or update their messaging - all while you sleep. |

Whether you want to scrape data directly into Google Sheets, monitor Amazon prices, extract job postings, or set up automated competitor analysis, the visual workflow approach you just mastered scales to handle complex business use cases with zero coding required.

The combination of n8n's visual workflow builder and our AI Web Scraping API eliminates the traditional barriers to automated data collection. No more brittle selectors, no more maintenance headaches - just describe what you want and let our AI handle the rest.

Welcome to the future of no-code and AI Web Scraping!

Before you go, check out these related reads: