A LangChain scraper lets developers build data pipelines that start with real-time data extraction and end with structured outputs for tasks such as embeddings and retrieval-augmented generation (RAG). Our HTML API handles the path to public content through JavaScript rendering, anti-bot bypassing, and content cleanup, so LangChain can process the result into usable text.

In this guide, we will cover the steps and integration details needed to combine LangChain with our Python SDK in a single Python project. Let's get straight to it!

Quick Answer (TL;DR)

Below is a full Python script that scrapes the YellowPages platform and restructures the result with LangChain, using the protected and optimized connections of our HTML API. Feel free to copy it and adjust it for your own use cases.

To explore alternative solutions, check out our post about JavaScript scraping.

# Importing our HTML API
from scrapingbee import ScrapingBeeClient
# BeautifulSoup parses the returned HTML
from bs4 import BeautifulSoup
# A standard-library function that encodes input parameters into a URL query string
from urllib.parse import urlencode
# Importing the LangChain text splitter
from langchain.text_splitter import RecursiveCharacterTextSplitter

# Initializing our API client in the "client" variable
client = ScrapingBeeClient(api_key='YOUR_API_KEY')

base = "https://www.yellowpages.com/search"
search_term = input("Search_term: ").strip()
location_term = input("Location_term: ").strip()
params = {"search_terms": search_term, "geo_location_terms": location_term}
url = f"{base}?{urlencode(params)}"
#print(url)

def parse_html_with_metadata(html, url):
    soup = BeautifulSoup(html, 'html.parser')
    text = soup.get_text(separator=' ', strip=True)  # Extracts the page text
    title = soup.title.string if soup.title else 'No Title'
    metadata = {'source': url, 'title': title}
    return text, title, metadata

def scrape_Yellow_Pages():
    js_scenario = {
        "instructions": [
            {"wait": 3000}
        ]
    }
    # Send the GET request for the URL built from your input
    response = client.get(
        url,
        params={
            "js_scenario": js_scenario,
            'block_resources': 'True',
            'country_code': 'us',
        }
    )
    result = response.text
    text, title, metadata = parse_html_with_metadata(result, url)
    # print(text)
    # Initialize RecursiveCharacterTextSplitter with desired chunk_size and chunk_overlap
    text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
    # Split the text into chunks
    splitted_texts = text_splitter.split_text(text)
    print(f"Title: {title}")
    print(f"Metadata: {metadata}")
    print(f"Number of chunks: {len(splitted_texts)}")
    for i, chunk in enumerate(splitted_texts):
        print(f"\n--- Chunk {i+1} ---\n{chunk}")

scrape_Yellow_Pages()

Why Use ScrapingBee With LangChain

Our API strengthens your LangChain pipeline by handling the web scraping layer that LangChain doesn’t cover. While it focuses on structuring and processing text, we handle fluid connections and access to extractable content. Our Python SDK automatically renders JavaScript, rotates proxies, sets geo-targeted IPs, and blocks unnecessary resources for faster loading. We also enable control over rate limits, and our tools allow you to bypass anti-bot features without requiring complex setups like Selenium or Puppeteer.

What You Need Before Starting

To perform the steps in this tutorial, you will need:

Python 3.6 or newer

Our API key

Python libraries: scrapingbee, beautifulsoup4, langchain

To learn more about how our SDK works, check out ScrapingBee documentation.

Step-by-Step: Web Scraping With LangChain & ScrapingBee

LangChain can manage data pipelines, but it needs a reliable scraper to feed it. Our API simplifies extraction by rendering JavaScript, rotating proxy connections, and returning clean HTML that LangChain can process into documents for downstream use in RAG pipelines or embeddings.

To test our web scraper API together with LangChain and retrieve structured information, we will run our solution against one of the best web sources for scraping: Yellowpages.com.

1) Install packages and set API key

Before we begin, make sure that you have Python (3.6 version or newer) installed on your device. You can download it via Microsoft Store or straight from the website – Python.org.

Then, using your Terminal or Command Prompt, type in the following command to download and install external libraries with pip – Python's package manager:

pip install scrapingbee langchain beautifulsoup4

LangChain will manage the processing and knowledge extraction from the fetched web content, BeautifulSoup will parse the HTML to retrieve only text, and our Python SDK will take care of fluid data extraction. Next, create or sign in to your ScrapingBee account. New users get a 7-day free trial of 1,000 credits to test their API requests.

After signing up, copy your API key from the dashboard. Then, create an appropriate project directory and create an empty text file with a .py extension. This will be our data collection script.
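If you would rather not paste the key into the script itself, one common option is to read it from an environment variable. The sketch below assumes a variable named SCRAPINGBEE_API_KEY and a helper called load_api_key; both names are our own illustration and are not part of the SDK.

```python
import os


def load_api_key(env=os.environ, name="SCRAPINGBEE_API_KEY"):
    """Return the API key stored in an environment variable, or raise a clear error.

    Both the variable name and this helper are hypothetical; pick any name you like.
    """
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {name} environment variable to your API key")
    return key


# Usage (commented out so the snippet runs without the SDK or a real key):
# client = ScrapingBeeClient(api_key=load_api_key())
```

With this in place, the script can be shared or committed without exposing the key.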

2) Fetch HTML with ScrapingBee

To avoid confusion, we will first create a script that accepts user input and extracts raw HTML content from the site without advanced features. To begin the script, import our Python SDK (we will add LangChain later), BeautifulSoup, and the "urlencode" function for building links to the desired content. After that, create a "client" variable that will contain your API key:

# Importing our HTML API
from scrapingbee import ScrapingBeeClient
# BeautifulSoup will parse the returned HTML later on
from bs4 import BeautifulSoup
# A standard-library function that encodes input parameters into a URL query string
from urllib.parse import urlencode

# Initializing our API client in the "client" variable
client = ScrapingBeeClient(api_key='YOUR_API_KEY')

Then, the next section will accept the YellowPages business type and location to construct a URL for extraction:

base = "https://www.yellowpages.com/search"

search_term = input("Search_term: ").strip()

location_term = input("Location_term: ").strip()

params = {"search_terms": search_term, "geo_location_terms": location_term}

url = f"{base}?{urlencode(params)}"
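To see what this URL construction produces without typing input each time, here is a small sketch that wraps the same logic in a function. The build_search_url name is hypothetical; the query keys and base URL are the ones used above.

```python
from urllib.parse import urlencode


def build_search_url(search_term, location_term,
                     base="https://www.yellowpages.com/search"):
    """Build the search URL from a business type and a location."""
    params = {
        "search_terms": search_term.strip(),
        "geo_location_terms": location_term.strip(),
    }
    # urlencode escapes characters that are not URL-safe (spaces, commas, ...)
    return f"{base}?{urlencode(params)}"


print(build_search_url("dentists", "Orlando, FL"))
# https://www.yellowpages.com/search?search_terms=dentists&geo_location_terms=Orlando%2C+FL
```

Note how the comma and the space in the location are percent-encoded, which is why the script relies on urlencode instead of plain string concatenation.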

Now we can start working on the content aggregation function. Inside it, we create the "js_scenario" variable, which contains JavaScript rendering instructions: wait 3 seconds for the page to load its content before extracting.

Note: JavaScript rendering is enabled because the render_js parameter is set to "true" by default.

Then, create a "response" variable that will store the contents of the GET API call. In its definition, we have three parameters:

js_scenario: Our pre-written instructions to run JavaScript on the page before extracting to handle dynamic content.

block_resources: Option to block loading images, CSS, and fonts to speed up scraping by preventing unnecessary resource downloads.

country_code: Specifies the ISO country code to route your request through a proxy located in that country, helping access region-specific or geo-restricted content.

def scrape_Yellow_Pages():
    js_scenario = {
        "instructions": [
            {"wait": 3000}
        ]
    }
    # Send the GET request for the URL built from your input
    response = client.get(
        url,
        params={
            "js_scenario": js_scenario,
            'block_resources': 'True',
            'country_code': 'us',
        }
    )

Finish up the script by printing out the result and invoking the finished function at the end:

    result = response.text
    print(result)

scrape_Yellow_Pages()
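The script above prints whatever comes back, including error pages. As an optional safeguard, the sketch below checks the HTTP status before using the body. It assumes the object returned by client.get exposes status_code and text the way a requests response does; html_or_raise is a hypothetical helper name.

```python
def html_or_raise(response):
    """Return the response body, or raise if the request did not succeed.

    Assumes `response` has `status_code` and `text` attributes,
    as a requests-style response object does.
    """
    status = response.status_code
    if status != 200:
        # Include the start of the body: it usually explains what went wrong
        raise RuntimeError(f"Request failed with HTTP {status}: {response.text[:200]}")
    return response.text


# Usage inside the scraping function (commented out; needs a live client):
# result = html_or_raise(response)
```

Failing early keeps error pages from being parsed and chunked as if they were real content.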

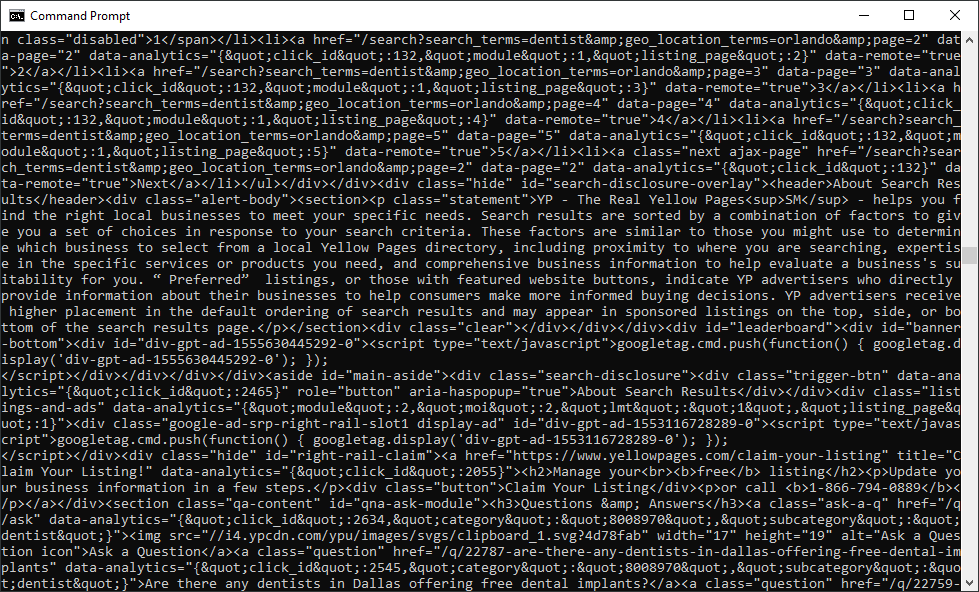

If extraction is successful, you should retrieve raw HTML data in your Terminal or Command Prompt. Here is the result of a script that looks for dentists in Orlando:

3) Parse HTML into LangChain Documents

To parse the HTML, we add a new function before the "scrape_Yellow_Pages" definition, which will remove the markup and leave only the text content:

# content parsing function
def parse_html_with_metadata(html, url):
    soup = BeautifulSoup(html, 'html.parser')
    text = soup.get_text(separator=' ', strip=True)  # Extracts the page text
    title = soup.title.string if soup.title else 'No Title'
    metadata = {'source': url, 'title': title}
    return text, title, metadata

After that, we invoke the function within the main scraping logic, right after retrieving the result variable, with two parameters: the extracted HTML ("result") and the page URL ("url"):

    result = response.text
    # the parsing function returns 3 values, so we assign them to new variables within the function
    text, title, metadata = parse_html_with_metadata(result, url)
    print(text)

Now, once we run the script with the same input parameters, the scraped data should look a lot cleaner:

4) Split text into chunks

Once we have the text without HTML markup, we can start implementing LangChain to split it into chunks and retrieve structured data. First, go to the top of the script and import LangChain's "RecursiveCharacterTextSplitter":

# Importing additional Langchain splitter

from langchain.text_splitter import RecursiveCharacterTextSplitter

Then, right after the invocation of the parsing function, add a variable that will work as your splitter:

# Initialize RecursiveCharacterTextSplitter with desired chunk_size and chunk_overlap

text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
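To build intuition for what chunk_size and chunk_overlap mean, here is a deliberately simplified sketch. It is not LangChain's algorithm: RecursiveCharacterTextSplitter prefers to break on separators such as paragraphs and spaces, while this hypothetical sliding_chunks helper just cuts fixed-size windows that share characters with their neighbours.

```python
def sliding_chunks(text, chunk_size, chunk_overlap):
    """Cut text into fixed-size windows where neighbours share `chunk_overlap` characters.

    A simplified stand-in used only to illustrate the two parameters.
    """
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    if not text:
        return []
    step = chunk_size - chunk_overlap
    # Stop early enough that the last window is not just a repeat of the overlap
    last_start = max(len(text) - chunk_overlap, 1)
    return [text[start:start + chunk_size] for start in range(0, last_start, step)]


print(sliding_chunks("abcdefghijklmnopqrstuvwxyz", chunk_size=10, chunk_overlap=3))
# ['abcdefghij', 'hijklmnopq', 'opqrstuvwx', 'vwxyz']
```

Each chunk starts with the last three characters of the previous one. That shared context is what the overlap setting buys you: a sentence cut at a chunk boundary still appears whole in at least one chunk when the overlap is large enough.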

The "splitted_texts" variable runs the splitter on our parsed content in the "text" variable:

splitted_texts = text_splitter.split_text(text)

To see our results, we print out all extracted structured data. The chunks themselves are structured in a list, so we run a for loop to go through them all:

    # Split the text into chunks
    splitted_texts = text_splitter.split_text(text)
    print(f"Title: {title}")
    print(f"Metadata: {metadata}")
    print(f"Number of chunks: {len(splitted_texts)}")
    for i, chunk in enumerate(splitted_texts):
        print(f"\n--- Chunk {i+1} ---\n{chunk}")

After running the code, we can see that the final result of our scraping process extracts information that is ready for data analysis:
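The script prints plain strings, but vector stores and retrievers in LangChain usually work with Document objects that carry metadata. As a possible next step, the sketch below uses the splitter's create_documents method to attach the source metadata to every chunk. The chunks_to_documents helper is hypothetical, and the import path is the one used earlier in this guide, which may differ in other LangChain versions.

```python
def chunks_to_documents(text, metadata, chunk_size=1000, chunk_overlap=200):
    """Split text and return LangChain Document objects that each carry `metadata`."""
    # Imported here so that only this helper depends on LangChain being installed
    from langchain.text_splitter import RecursiveCharacterTextSplitter

    splitter = RecursiveCharacterTextSplitter(
        chunk_size=chunk_size, chunk_overlap=chunk_overlap
    )
    # create_documents takes a list of texts and a parallel list of metadata dicts
    return splitter.create_documents([text], metadatas=[metadata])


# Usage inside the scraping function (commented out; needs the scraped text):
# docs = chunks_to_documents(text, metadata)
# print(docs[0].page_content, docs[0].metadata)
```

Keeping the source URL and title on each chunk makes it possible to cite where an answer came from once the chunks are retrieved.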

Advanced Options That Matter

With the help of our configuration parameters, you can send GET API requests and retrieve data without worrying about rate limits and other common web scraping challenges. To continue extracting data from web pages, we have tools for concurrency, retry logic, resource blocking, and regulatory compliance, ensuring speed, control, and resilience to anti-scraping mechanisms.

Data scrapers that use our Python SDK can handle concurrency with the concurrent.futures library, which is ideal for I/O-bound tasks like HTTP requests. This works well because the SDK uses the blocking requests library. For more details, check out our blog on how to make concurrent requests in Python.
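A minimal sketch of that approach with a thread pool follows. The fetch_all helper is hypothetical, and it takes the fetching function as an argument so the same code works with the SDK client or any other callable; the max_workers default of 5 is an arbitrary placeholder.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_all(urls, fetch, max_workers=5):
    """Run fetch(url) for every URL in a thread pool; results keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in the same order as `urls`
        return list(pool.map(fetch, urls))


# Usage with the SDK client (commented out; needs a real API key):
# pages = fetch_all(urls, lambda u: client.get(u).text)
```

Threads suit this job because each worker spends most of its time waiting on the network, so several blocking requests can be in flight at once.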

On top of that, our API supports automatic retries for both the GET API calls and js_scenario execution, but the behavior depends on the type of error and how you configure the request. Also, if failed requests return a 500 HTTP status code, the attempt will not charge you any credits.
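Separately from whatever the API retries on its side, you may also want a small client-side safety net for network hiccups. The sketch below is a generic retry wrapper with exponential backoff; call_with_retries is a hypothetical helper and not part of the SDK, and the attempt count and delays are placeholder values.

```python
import time


def call_with_retries(func, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call func() up to `attempts` times, doubling the wait after each failure."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(attempts):
        try:
            return func()
        except Exception as error:
            last_error = error
            # Wait before the next try, but not after the final failure
            if attempt < attempts - 1:
                sleep(base_delay * 2 ** attempt)
    raise last_error


# Usage with the SDK client (commented out; needs a real API key):
# response = call_with_retries(lambda: client.get(url, params={"country_code": "us"}))
```

The wrapper only retries when the call raises an exception, so if you also want to retry on error status codes, raise from inside the function you pass in.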

Our API also supports advanced resource blocking, letting you skip images, CSS, or tracking scripts that slow down response times or trigger bot protection. This saves bandwidth, speeds up page loads, and avoids unnecessary noise in your results. We already used this option in the parameters for our API call.

Finally, we’re fully GDPR compliant and ensure all requests pass through secure, encrypted proxies. This should give you peace of mind when scraping large volumes with minimal setup and without running afoul of privacy regulations.

Ready to scrape with LangChain? Get your ScrapingBee API key, copy the TL;DR script, and run it today. Scale up with JavaScript rendering, headers, cookies, and country targeting. ScrapingBee takes care of scraping so you can focus on building with LangChain.

Frequently Asked Questions (FAQs)

Can LangChain scrape JavaScript-heavy sites?

Not by itself. You will need our Python SDK or an alternative tool to fetch and render JavaScript content. LangChain handles information processing after the data is retrieved.

How do I avoid getting blocked?

You can avoid IP restrictions by using our built-in proxy rotation parameters, JavaScript rendering, and resource blocking to mimic real user behavior and bypass bot detection.

How much does this cost to run?

The amount of charged credits depends on the configuration of parameters within your GET API call. Check out our documentation page to see the credit costs for all features.

Can I build a RAG system with this data?

Yes! The structured chunks returned by LangChain after using ScrapingBee can be used to populate vector databases and feed into RAG pipelines.

Kevin worked in the web scraping industry for 10 years before co-founding ScrapingBee. He is also the author of the Java Web Scraping Handbook.