Taking a screenshot of a website is straightforward with ScrapingBee. You can capture the visible portion of the page, the whole page, or a single element on the page.

That can be done by specifying one of these parameters with your request:

- `screenshot` set to `true` or `false`.
- `screenshot_full_page` set to `true` or `false`.
- `screenshot_selector` set to the CSS selector of the element.
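As a rough illustration of how these options map onto a request, the sketch below builds the query string for each mode with Go's `net/url` package. The helper name `screenshotQuery` and its map-based `extra` argument are hypothetical conveniences for this example, not part of ScrapingBee's API; only the parameter names come from the list above.

```go
package main

import (
	"fmt"
	"net/url"
)

// screenshotQuery is a hypothetical helper: it encodes the API key, the
// target URL and any extra ScrapingBee parameters into a query string.
func screenshotQuery(apiKey, targetURL string, extra map[string]string) string {
	q := url.Values{}
	q.Set("api_key", apiKey)
	q.Set("url", targetURL)
	for k, v := range extra {
		q.Set(k, v)
	}
	// Encode sorts the keys, so the result is deterministic.
	return q.Encode()
}

func main() {
	target := "https://www.scrapingbee.com"
	// One request per screenshot mode described above.
	modes := []map[string]string{
		{"screenshot": "true"},
		{"screenshot_full_page": "true"},
		{"screenshot_selector": "footer"},
	}
	for _, extra := range modes {
		fmt.Println(screenshotQuery("YOUR-API-KEY", target, extra))
	}
}
```

Each printed line is the query string you would append to the API endpoint after a `?`; the full examples below do the same thing through `req.URL.Query()`.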

In this tutorial, we will see how to take a screenshot of ScrapingBee’s blog using the three methods.

1. Using the screenshot parameter:

The code below will take a screenshot of the blog home page:

```go
package main

import (
	"fmt"
	"io"
	"log"
	"net/http"
	"os"
)

const API_KEY = "YOUR-API-KEY"

const SCRAPINGBEE_URL = "https://app.scrapingbee.com/api/v1"

// take_screenshot asks ScrapingBee to capture the visible part of target_url
// and saves the image to file_path. It returns the number of bytes written.
func take_screenshot(target_url string, file_path string) (int, error) {
	req, err := http.NewRequest("GET", SCRAPINGBEE_URL, nil)
	if err != nil {
		return 0, fmt.Errorf("failed to build the request: %w", err)
	}

	q := req.URL.Query()
	q.Add("api_key", API_KEY)
	q.Add("url", target_url)
	q.Add("screenshot", "true") // Capture the visible viewport only
	req.URL.RawQuery = q.Encode()

	client := &http.Client{}
	resp, err := client.Do(req)
	if err != nil {
		return 0, fmt.Errorf("failed to request ScrapingBee: %w", err)
	}
	defer resp.Body.Close()

	if resp.StatusCode != http.StatusOK {
		return 0, fmt.Errorf("request failed with status code %d", resp.StatusCode)
	}

	bodyBytes, err := io.ReadAll(resp.Body)
	if err != nil {
		return 0, fmt.Errorf("couldn't read the response body: %w", err)
	}

	file, err := os.Create(file_path)
	if err != nil {
		return 0, fmt.Errorf("couldn't create the file: %w", err)
	}

	l, err := file.Write(bodyBytes) // Write the image bytes to the file
	if err != nil {
		file.Close()
		return 0, fmt.Errorf("couldn't write content to the file: %w", err)
	}

	if err := file.Close(); err != nil {
		return 0, fmt.Errorf("couldn't close the file: %w", err)
	}

	return l, nil
}

func main() {
	target_url := "https://www.scrapingbee.com"

	saved_screenshot, err := take_screenshot(target_url, "./screenshot.png")
	if err != nil {
		log.Fatal(err)
	}

	fmt.Println(saved_screenshot) // Number of bytes written
}
```

As you’ll see below, the screenshot only covers the visible part of the page (1920x1080 pixels). It doesn’t include the blog posts or the footer.

So what determines the size of the image and which parts of the page are visible? The answer is the scraper’s viewport, whose default width and height are 1920px and 1080px respectively. To change them, add two parameters to the request: window_width and window_height. The code below sets the viewport to 1280x720:

```go
package main

import (
	"fmt"
	"io"
	"log"
	"net/http"
	"os"
)

const API_KEY = "YOUR-API-KEY"

const SCRAPINGBEE_URL = "https://app.scrapingbee.com/api/v1"

// take_screenshot captures the visible part of target_url using a custom
// 1280x720 viewport and saves the image to file_path.
// It returns the number of bytes written.
func take_screenshot(target_url string, file_path string) (int, error) {
	req, err := http.NewRequest("GET", SCRAPINGBEE_URL, nil)
	if err != nil {
		return 0, fmt.Errorf("failed to build the request: %w", err)
	}

	q := req.URL.Query()
	q.Add("api_key", API_KEY)
	q.Add("url", target_url)
	q.Add("window_width", "1280") // Viewport width in pixels
	q.Add("window_height", "720") // Viewport height in pixels
	q.Add("screenshot", "true")
	req.URL.RawQuery = q.Encode()

	client := &http.Client{}
	resp, err := client.Do(req)
	if err != nil {
		return 0, fmt.Errorf("failed to request ScrapingBee: %w", err)
	}
	defer resp.Body.Close()

	if resp.StatusCode != http.StatusOK {
		return 0, fmt.Errorf("request failed with status code %d", resp.StatusCode)
	}

	bodyBytes, err := io.ReadAll(resp.Body)
	if err != nil {
		return 0, fmt.Errorf("couldn't read the response body: %w", err)
	}

	file, err := os.Create(file_path)
	if err != nil {
		return 0, fmt.Errorf("couldn't create the file: %w", err)
	}

	l, err := file.Write(bodyBytes) // Write the image bytes to the file
	if err != nil {
		file.Close()
		return 0, fmt.Errorf("couldn't write content to the file: %w", err)
	}

	if err := file.Close(); err != nil {
		return 0, fmt.Errorf("couldn't close the file: %w", err)
	}

	return l, nil
}

func main() {
	target_url := "https://www.scrapingbee.com"

	saved_screenshot, err := take_screenshot(target_url, "./screenshot.png")
	if err != nil {
		log.Fatal(err)
	}

	fmt.Println(saved_screenshot) // Number of bytes written
}
```

And as you will see, the image below is 1280x720 pixels.

2. Using the screenshot_full_page parameter:

This parameter makes ScrapingBee capture the full page, not just the viewport. Here’s how to use it:

```go
package main

import (
	"fmt"
	"io"
	"log"
	"net/http"
	"os"
)

const API_KEY = "YOUR-API-KEY"

const SCRAPINGBEE_URL = "https://app.scrapingbee.com/api/v1"

// take_screenshot_full captures the whole page at target_url and saves the
// image to file_path. It returns the number of bytes written.
func take_screenshot_full(target_url string, file_path string) (int, error) {
	req, err := http.NewRequest("GET", SCRAPINGBEE_URL, nil)
	if err != nil {
		return 0, fmt.Errorf("failed to build the request: %w", err)
	}

	q := req.URL.Query()
	q.Add("api_key", API_KEY)
	q.Add("url", target_url)
	q.Add("screenshot_full_page", "true") // Capture the entire page
	req.URL.RawQuery = q.Encode()

	client := &http.Client{}
	resp, err := client.Do(req)
	if err != nil {
		return 0, fmt.Errorf("failed to request ScrapingBee: %w", err)
	}
	defer resp.Body.Close()

	if resp.StatusCode != http.StatusOK {
		return 0, fmt.Errorf("request failed with status code %d", resp.StatusCode)
	}

	bodyBytes, err := io.ReadAll(resp.Body)
	if err != nil {
		return 0, fmt.Errorf("couldn't read the response body: %w", err)
	}

	file, err := os.Create(file_path)
	if err != nil {
		return 0, fmt.Errorf("couldn't create the file: %w", err)
	}

	l, err := file.Write(bodyBytes) // Write the image bytes to the file
	if err != nil {
		file.Close()
		return 0, fmt.Errorf("couldn't write content to the file: %w", err)
	}

	if err := file.Close(); err != nil {
		return 0, fmt.Errorf("couldn't close the file: %w", err)
	}

	return l, nil
}

func main() {
	target_url := "https://www.scrapingbee.com"

	saved_screenshot, err := take_screenshot_full(target_url, "./screenshot.png")
	if err != nil {
		log.Fatal(err)
	}

	fmt.Println(saved_screenshot) // Number of bytes written
}
```

As you’ll see, the result is a screenshot of the whole page:

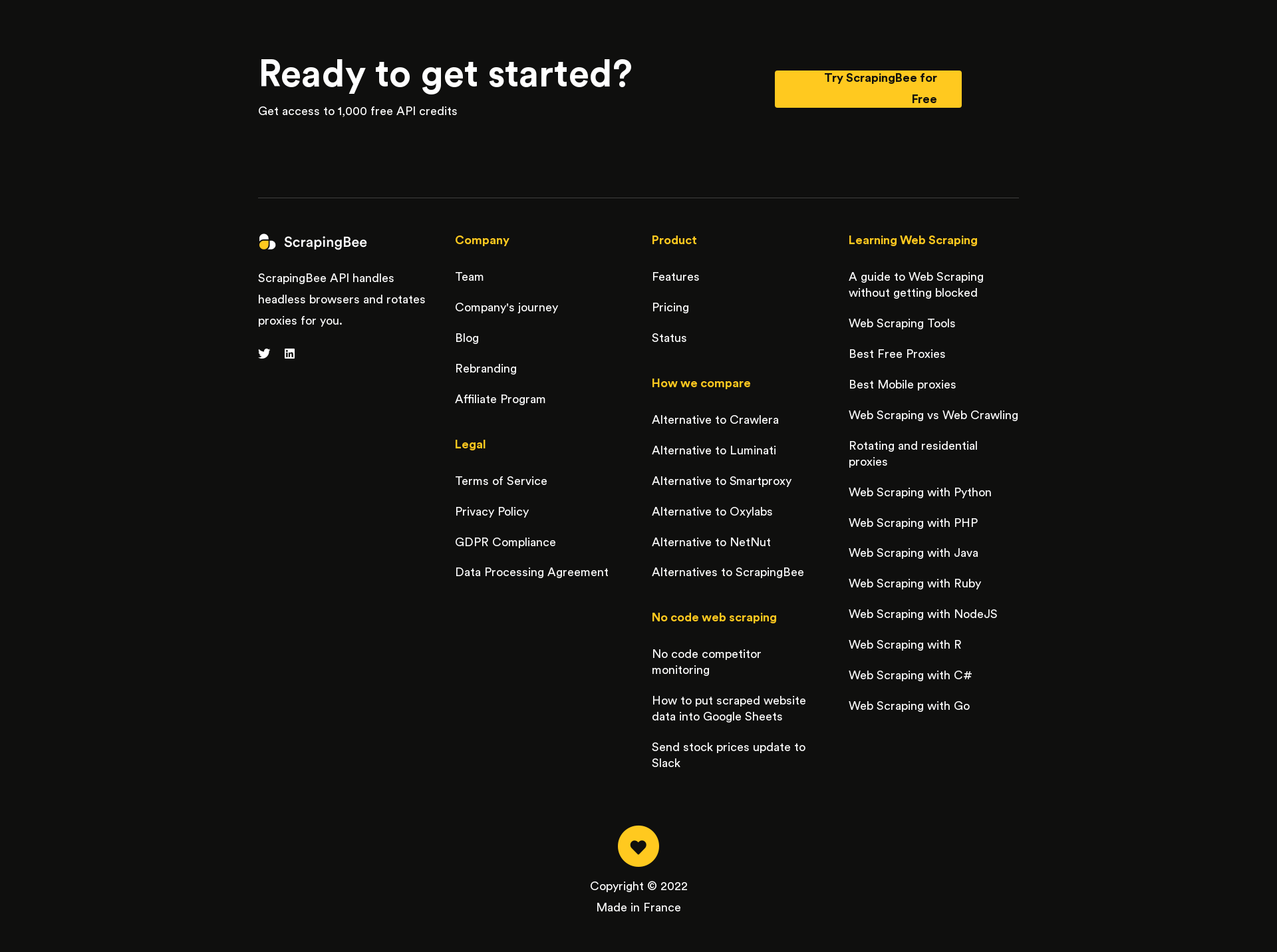

3. Using the screenshot_selector parameter:

This parameter takes a screenshot of a single HTML element on the page. All you have to do is specify the element’s CSS selector.

Let’s say we want to take a screenshot of the footer section of this page. We simply add this parameter to our request: screenshot_selector="footer".

So the code will look like this:

```go
package main

import (
	"fmt"
	"io"
	"log"
	"net/http"
	"os"
)

const API_KEY = "YOUR-API-KEY"

const SCRAPINGBEE_URL = "https://app.scrapingbee.com/api/v1"

// screenshot_element captures the element matching the CSS selector on
// target_url and saves the image to file_path.
// It returns the number of bytes written.
func screenshot_element(target_url string, selector string, file_path string) (int, error) {
	req, err := http.NewRequest("GET", SCRAPINGBEE_URL, nil)
	if err != nil {
		return 0, fmt.Errorf("failed to build the request: %w", err)
	}

	q := req.URL.Query()
	q.Add("api_key", API_KEY)
	q.Add("url", target_url)
	q.Add("screenshot_selector", selector) // CSS selector of the element
	req.URL.RawQuery = q.Encode()

	client := &http.Client{}
	resp, err := client.Do(req)
	if err != nil {
		return 0, fmt.Errorf("failed to request ScrapingBee: %w", err)
	}
	defer resp.Body.Close()

	if resp.StatusCode != http.StatusOK {
		return 0, fmt.Errorf("request failed with status code %d", resp.StatusCode)
	}

	bodyBytes, err := io.ReadAll(resp.Body)
	if err != nil {
		return 0, fmt.Errorf("couldn't read the response body: %w", err)
	}

	file, err := os.Create(file_path)
	if err != nil {
		return 0, fmt.Errorf("couldn't create the file: %w", err)
	}

	l, err := file.Write(bodyBytes) // Write the image bytes to the file
	if err != nil {
		file.Close()
		return 0, fmt.Errorf("couldn't write content to the file: %w", err)
	}

	if err := file.Close(); err != nil {
		return 0, fmt.Errorf("couldn't close the file: %w", err)
	}

	return l, nil
}

func main() {
	target_url := "https://www.scrapingbee.com"

	saved_screenshot, err := screenshot_element(target_url, "footer", "./sss.png")
	if err != nil {
		log.Fatal(err)
	}

	fmt.Println(saved_screenshot) // Number of bytes written
}
```

And the result, as you can see, is a screenshot of the footer: